Implementation

This section only covers working with Face Analytics in the Catalyst console. Refer to the SDK and API documentation sections for implementing Face Analytics in your application’s code.

As mentioned earlier, the console gives you access to code templates for integrating Face Analytics into your Catalyst application. You can also test the feature there by uploading images that contain faces and viewing the results.

Access Face Analytics

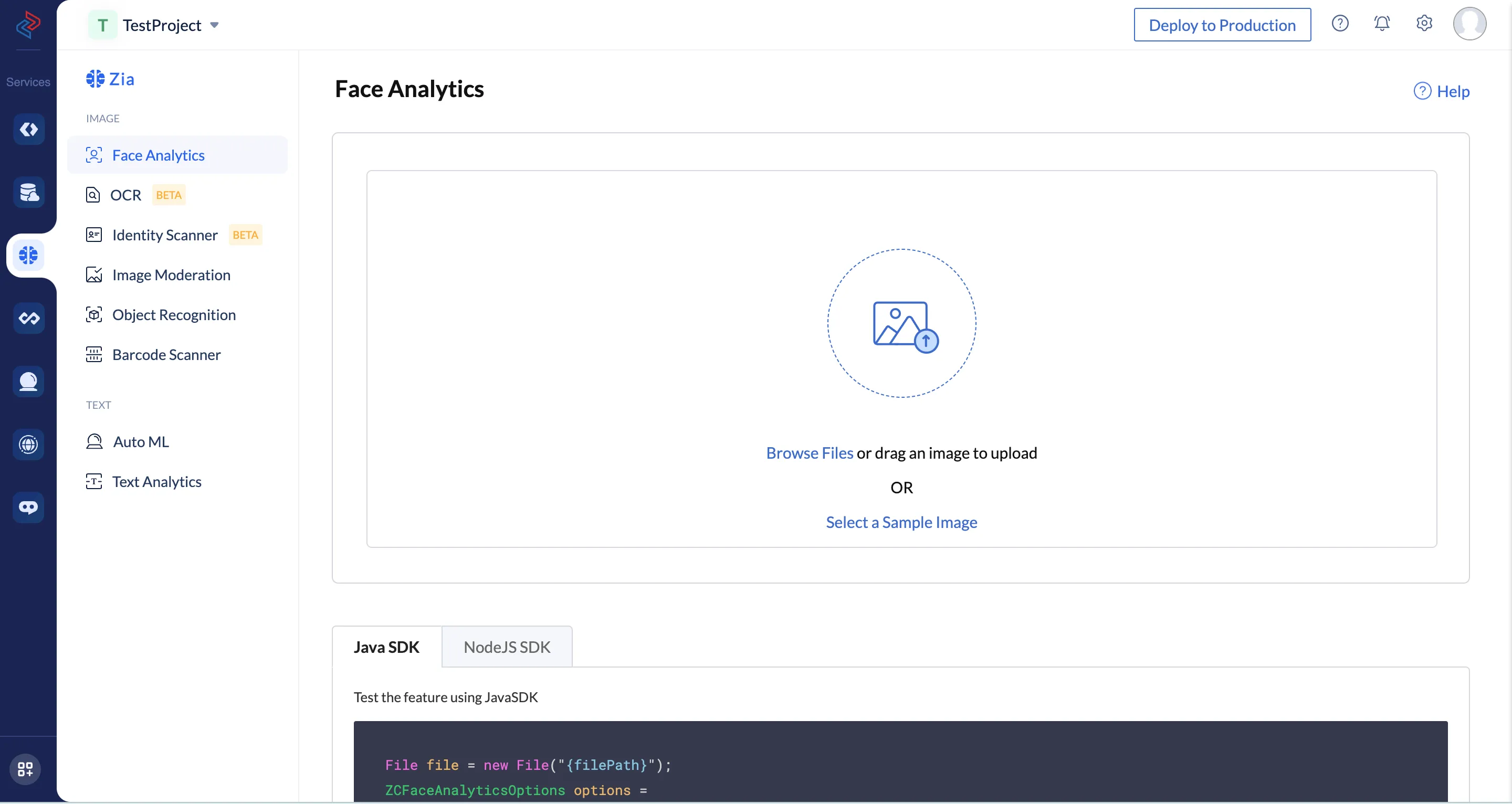

To access Face Analytics in your Catalyst console:

- Navigate to Zia Services in the left pane of the Catalyst console and click Face Analytics.

- Click Try a Demo to open the Face Analytics feature.

Test Face Analytics in the Catalyst Console

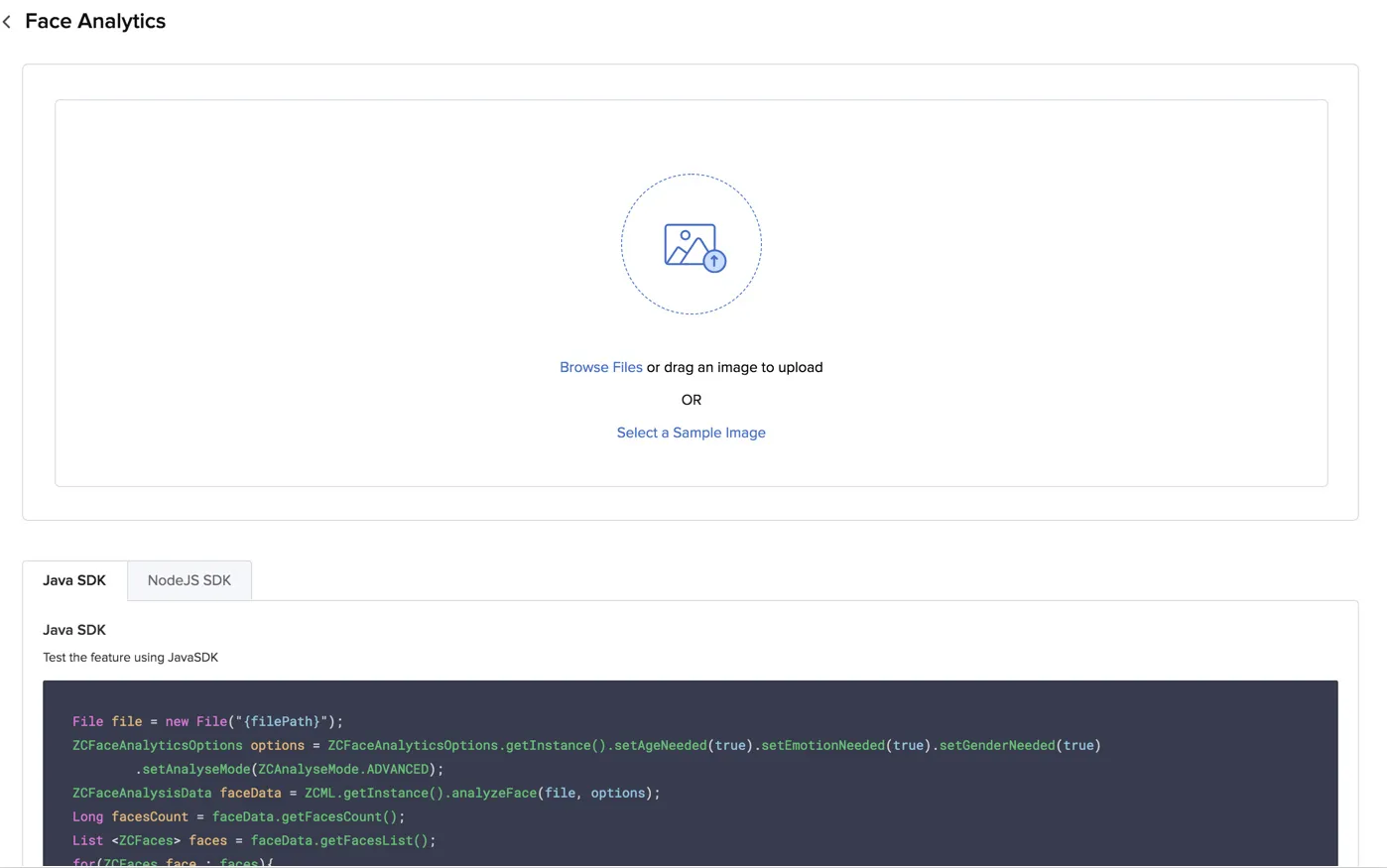

You can test Face Analytics by either selecting a sample image from Catalyst or by uploading your own image.

To scan a sample image with a face and view the result:

- Click Select a Sample Image in the box.

- Select an image from the samples provided.

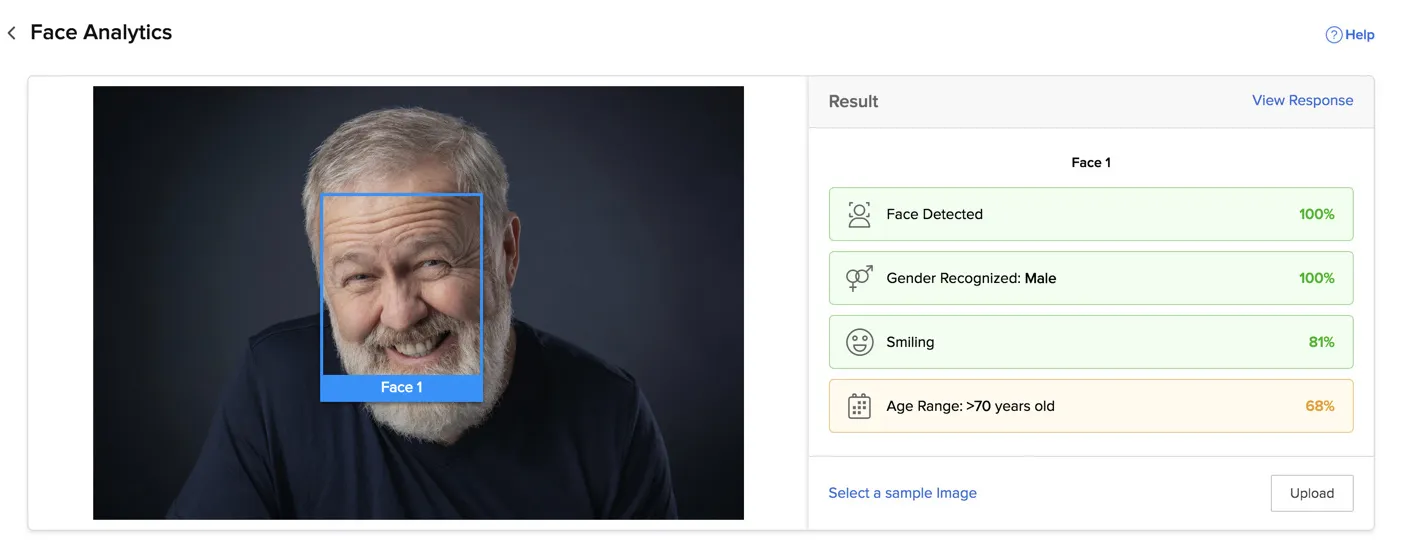

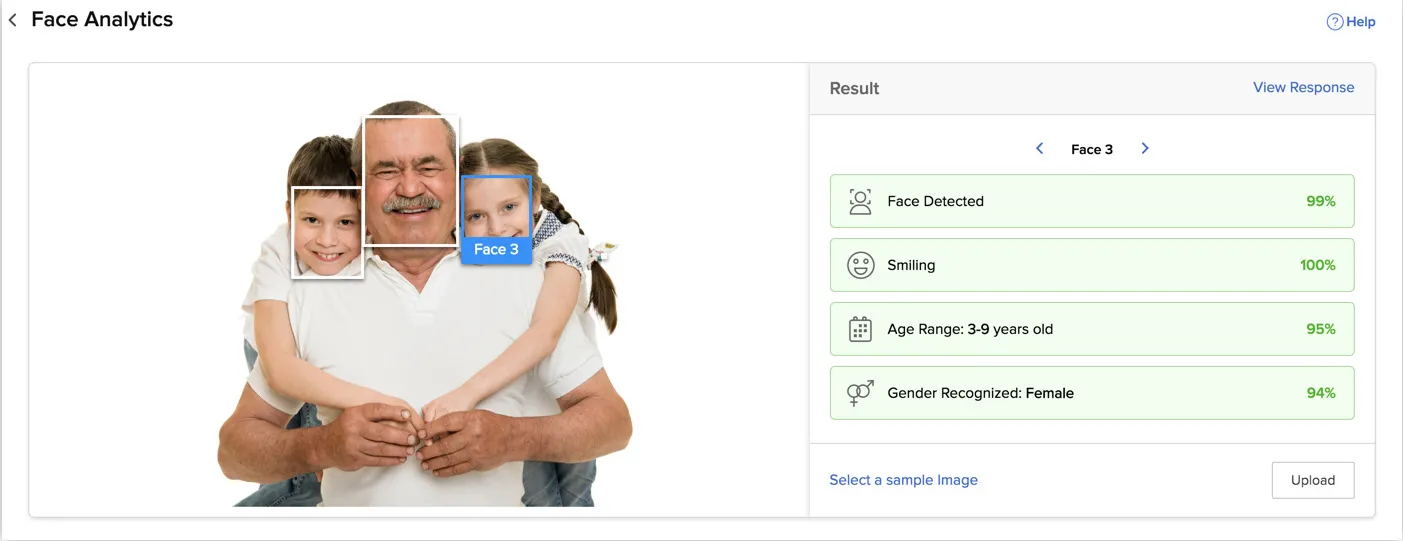

Face Analytics scans the image for faces, then analyzes and predicts the attributes of each detected face. For each face, it provides a textual result of the analysis along with the confidence level of each prediction as a percentage.

The colors of the response bars indicate the confidence range of each prediction: red for 0-30%, orange for 30-80%, and green for 80-100%.
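
The color bands described above can be expressed as a small lookup. This is a minimal sketch, not part of the Catalyst SDK: the function name `confidence_band` is hypothetical, and because the stated ranges share the values 30 and 80, the sketch assumes that a boundary value falls into the higher band.

```python
def confidence_band(percent: float) -> str:
    """Map a confidence percentage to the bar color shown in the console.

    Assumption: the boundary values 30 and 80 belong to the higher band,
    since the documented ranges overlap at those points.
    """
    if not 0 <= percent <= 100:
        raise ValueError("confidence must be between 0 and 100")
    if percent < 30:
        return "red"
    if percent < 80:
        return "orange"
    return "green"


# Example: classify a few confidence values.
for value in (12.5, 55, 93):
    print(value, confidence_band(value))
```

A helper like this can be useful if you want to reproduce the console's color coding when displaying results in your own application.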

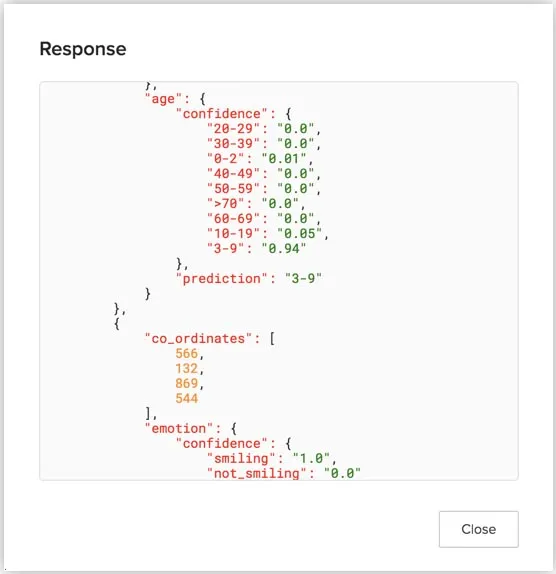

By default, the image is processed in the advanced landmark localization mode. In this mode, the JSON response provides the general coordinates of each face and the coordinates of its localized landmarks, in addition to the other detections. Click View Response to view the JSON response.

You can refer to the API documentation to view a complete sample JSON response structure.

To upload your own image and test Face Analytics:

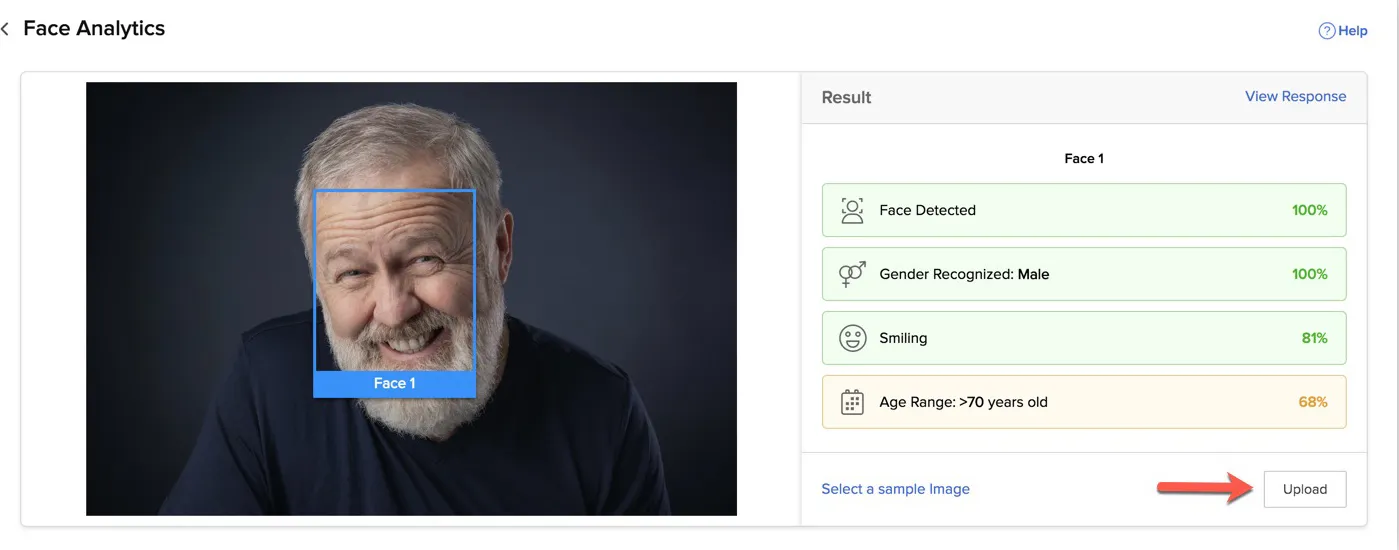

- Click Upload under the Result section.

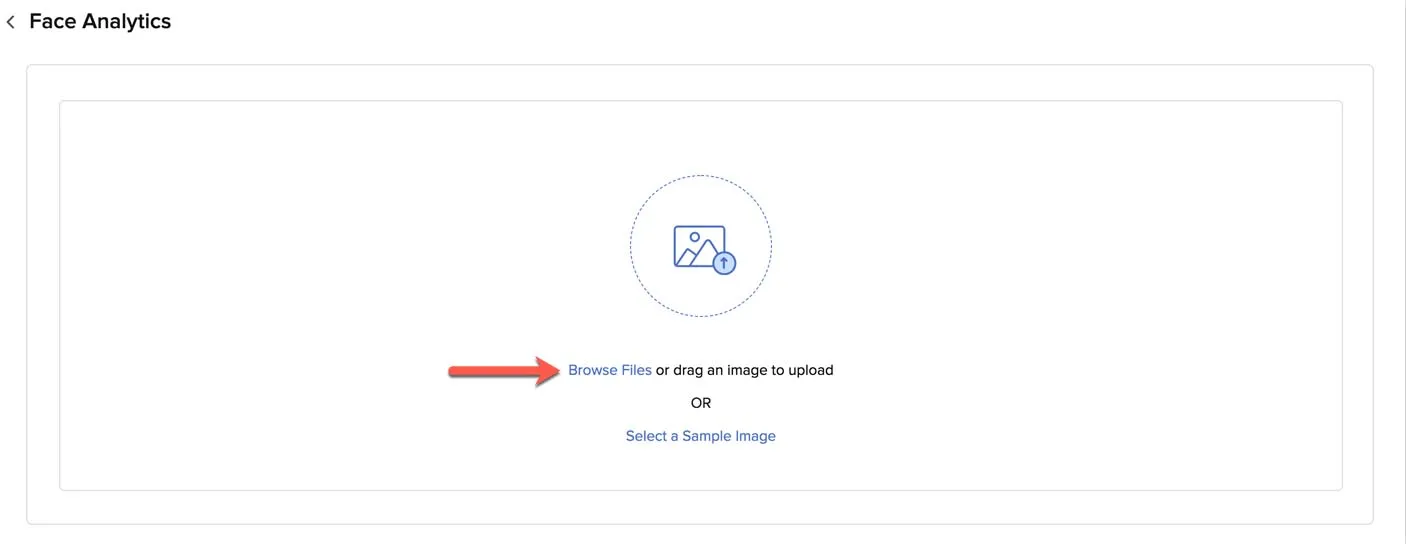

If you are reopening Face Analytics after closing it, click Browse Files in this box.

- Upload a file from your local system.

As mentioned earlier, Face Analytics can detect and analyze the attributes of up to 10 faces in an image. Click the side arrows to view the results for each detected face. The JSON response also contains the results for every detected face.

Access Code Templates for Face Analytics

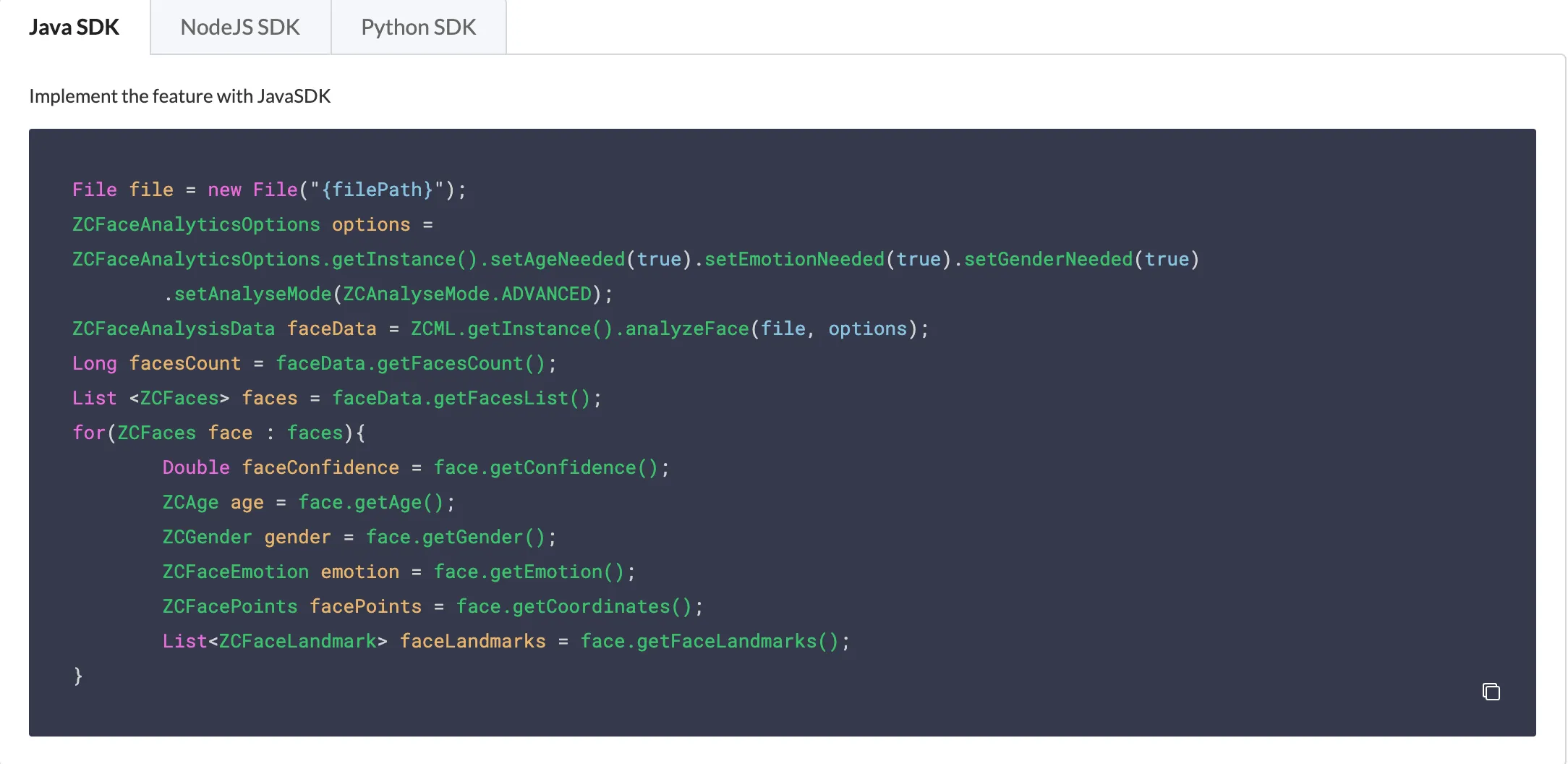

You can implement Face Analytics in your Catalyst application using the code templates that Catalyst provides for the Java, Node.js, and Python platforms.

You can access them from the section below the test window. Click the Java SDK, NodeJS SDK, or Python SDK tab, and copy the code using the copy icon. You can paste this code into your web or Android application’s code wherever you require it.

In Java, you can process the input file as a new File. The ZCFaceAnalyticsOptions module lets you enable or disable the analysis of each attribute. For example, if you set setAgeNeeded to false, Face Analytics will not provide age prediction results for the faces detected in the image. You can also set the landmark localization mode to BASIC, MODERATE, or ADVANCED using setAnalyseMode.

In Node.js, the facePromise object holds the input image file and the options set for it. You can specify the mode as basic, moderate, or advanced, and set an attribute to true or false to enable or disable its analysis.

In Python, the analyse_face() method accepts the input image as its argument. You can optionally specify the analysis mode as basic, moderate, or advanced, and set the attributes age, smile, or gender to true to detect them or false to skip them. If you omit these values, all attributes are detected and the image is processed in the advanced mode.
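
The Python options described above can be assembled and validated before they are passed to the SDK. The following is a minimal sketch under stated assumptions: `build_face_options` is a hypothetical helper, not part of the Catalyst SDK, and the dictionary keys (`mode`, `age`, `smile`, `gender`) simply mirror the option names in this section. Check the Python SDK template in the console for the exact keys and call signature.

```python
VALID_MODES = ("basic", "moderate", "advanced")


def build_face_options(mode="advanced", age=True, smile=True, gender=True):
    """Build an options dictionary for a Face Analytics request.

    Hypothetical helper: the defaults follow the behavior described in
    this section (advanced mode, all attributes detected).
    """
    mode = mode.lower()
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}, got {mode!r}")
    return {
        "mode": mode,
        "age": bool(age),
        "smile": bool(smile),
        "gender": bool(gender),
    }


# Example: basic landmark mode with age prediction turned off.
options = build_face_options(mode="basic", age=False)
print(options)

# Assumed usage with the SDK (not verified here; copy the exact call
# from the Python SDK tab in the console):
# with open("face.jpg", "rb") as image_file:
#     result = zia.analyse_face(image_file, options)
```

Validating the mode up front surfaces a typo such as "advance" as a local error instead of a failed request.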

Last Updated 2025-02-19 15:51:40 +0530 IST
