Create an ML pipeline

To build the prediction model, we will use the preprocessed dataset in the ML Pipeline Builder. The initial step in building the ML Pipeline involves selecting the target column, which is the column that we are trying to predict.

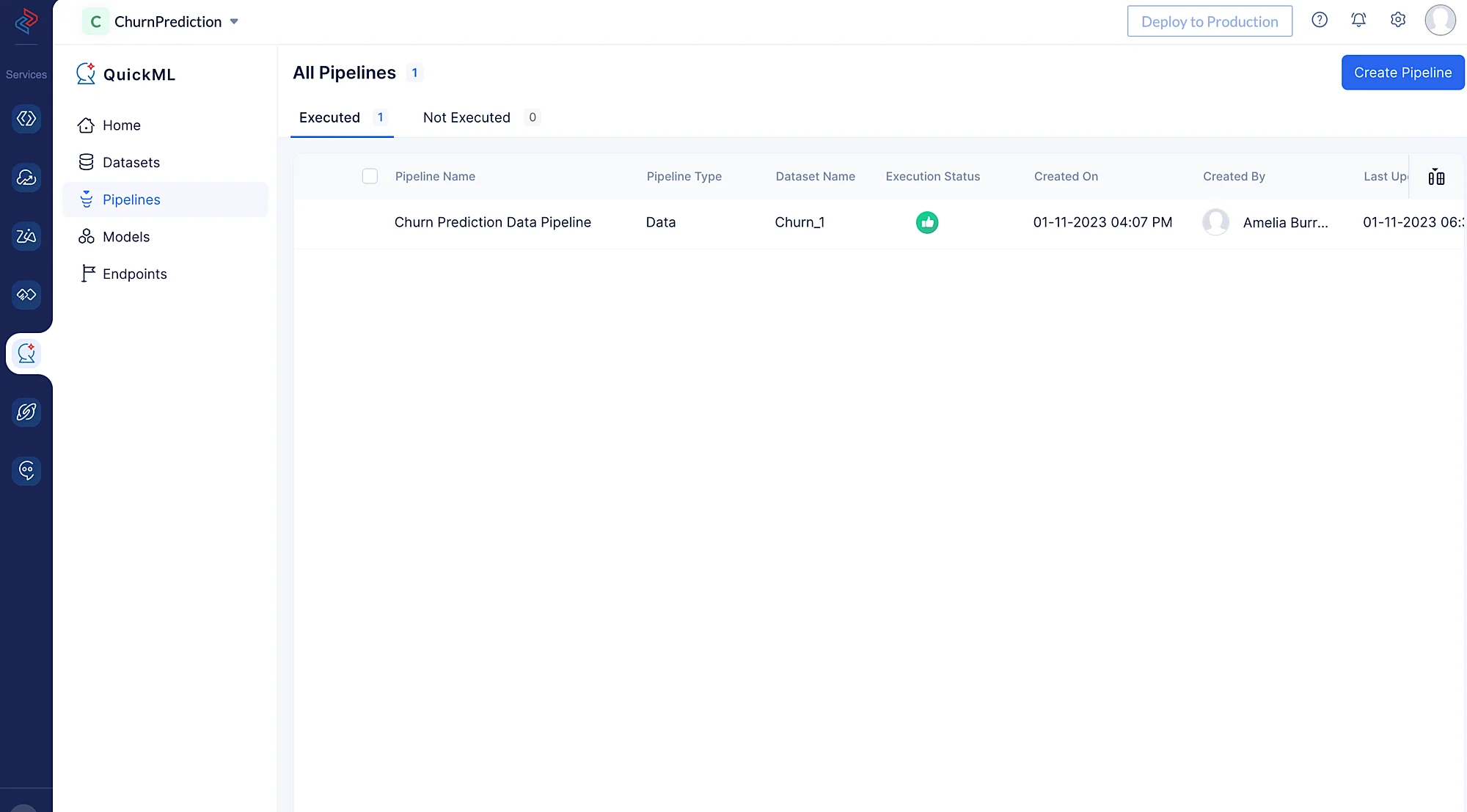

To create an ML pipeline, first Navigate to the Pipelines component and click on the Create Pipeline option.

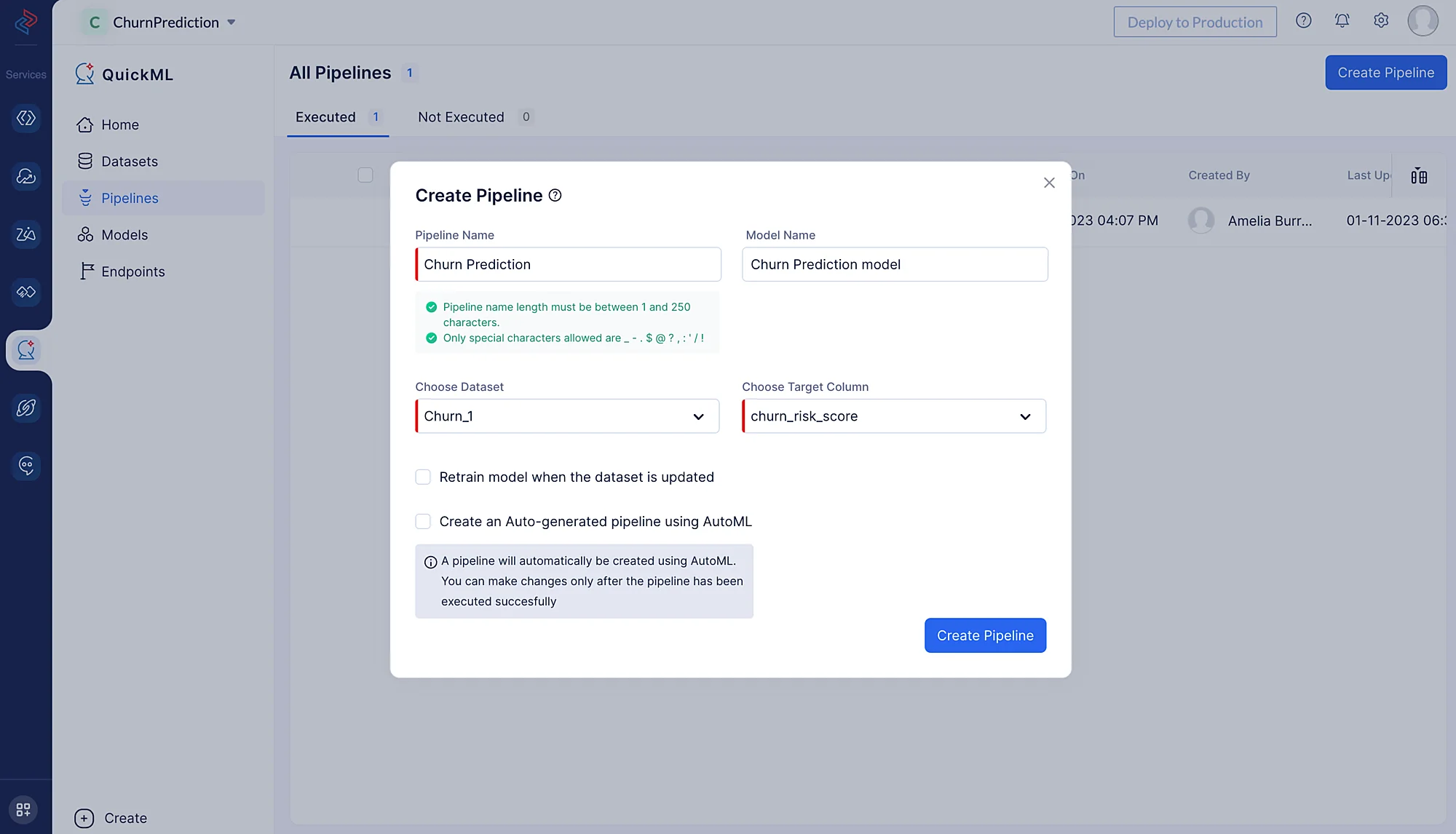

In the pop-up that appears, provide the pipeline name, we’ll Name the pipeline as Churn Prediction and the model Churn Prediction Model in the Create Pipeline pop-up. Then, select the appropriate dataset and the column name of the target.

We need to select the source dataset that is chosen for building the data pipeline, as the preprocessed data is reflected in the source dataset. In our case, we will be importing the Churn_1 dataset, as we have selected it for preprocessing and cleaning, and our target is the column named churn_risk_score.

-

Encoding categorical columns

Encoders are used in various data preprocessing and machine learning tasks to convert categorical or non-numeric data into a numerical format that machine learning algorithms can work with effectively.

-

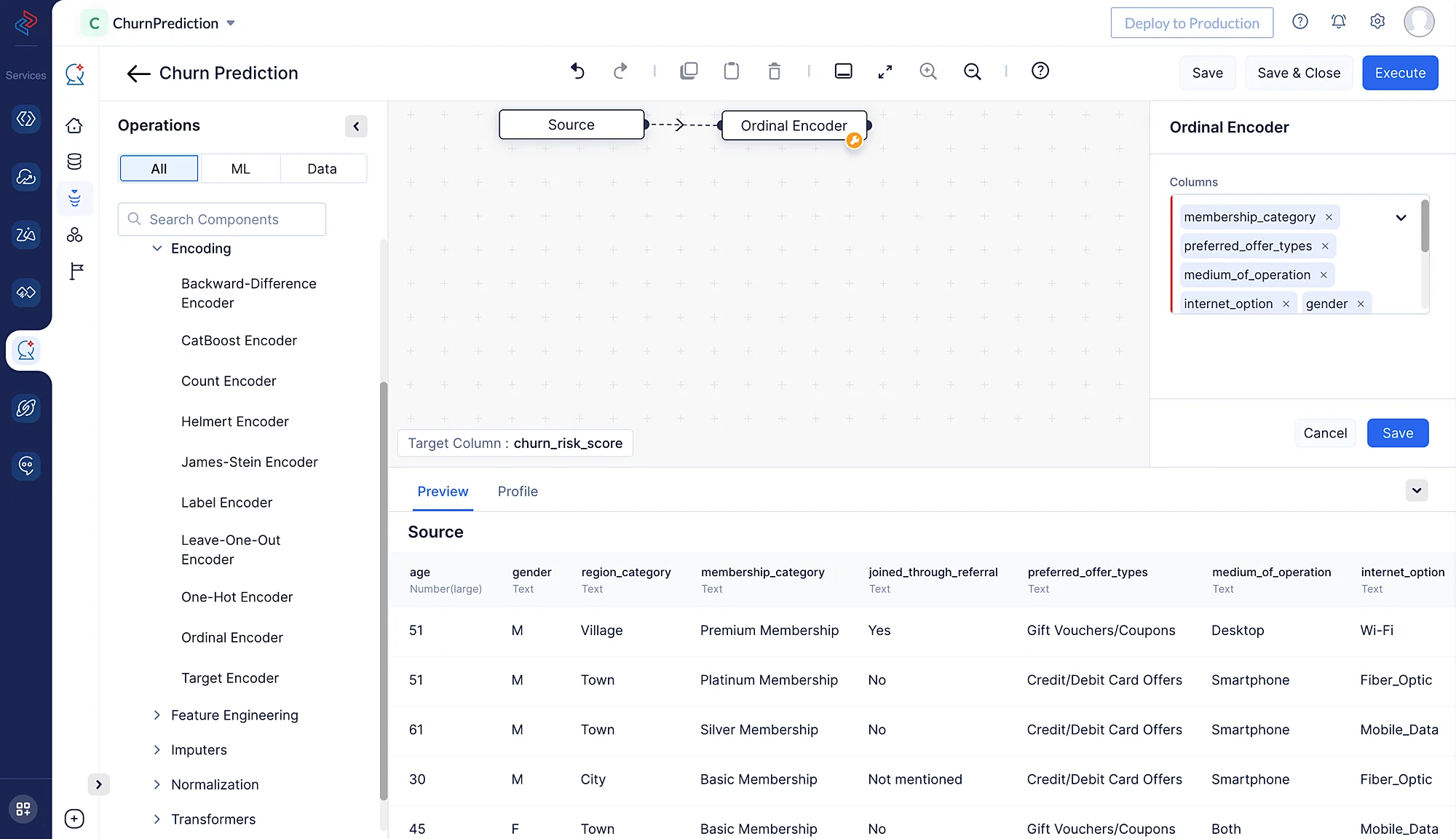

Ordinal encoding

Here we are using ordinal encoding to encode the following categorical features: “membership_category”, preferred_offer_types", “medium_of_operation”, “internet_option”, “gender”, “used_special_discount”, “past_complaints”, “complaint_status” and “feedback”. It assigns integers to the categories based on their order, making it possible for machine learning algorithms to capture the ordinal nature of the data. We’ll use the Ordinal Encoder node by navigating to ML operations, clicking the ->Encoding component, and choosing-> Ordinal Encoder in QuickML to turn the selected category columns into numerical columns.

-

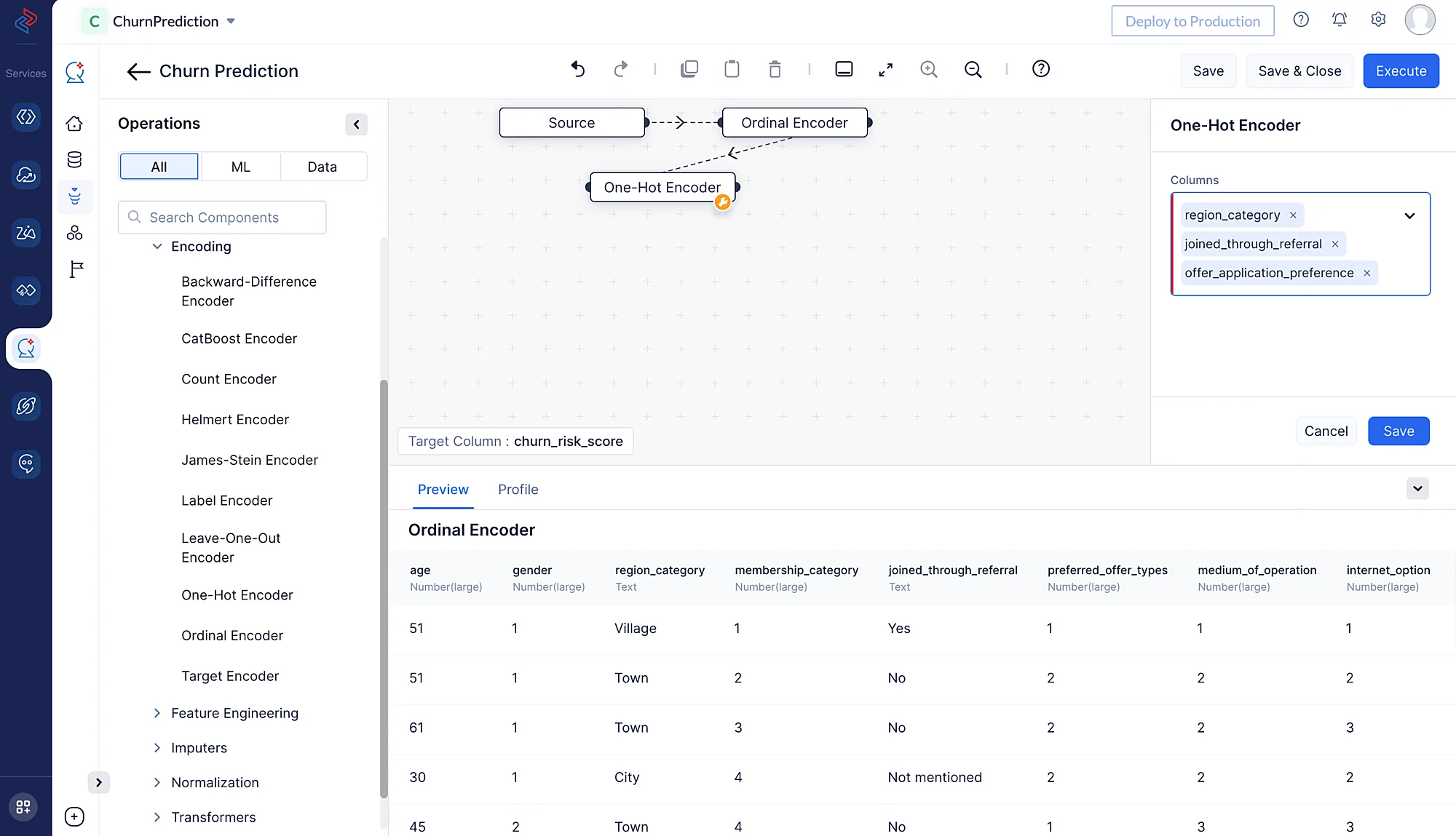

One-hot encoder

One-hot encoding is typically applied to categorical columns in a dataset, where each category represents a distinct class or group. This method typically increases the dimensionality of the dataset because it creates a new binary column for each unique category. The number of binary columns is equal to the number of unique categories minus one, as you can infer the presence of the last category from the absence of all others.

Here, we are using One-Hot Encoder node to encode the following columns: “region_category”, “joined_through_referral” and “offer_application_preference”. We’ll use the One-Hot Encoder node by navigating to ML operations, selecting the -> Encoding component and choosing -> One-Hot Encoder in QuickML to turn the selected category columns into numerical columns.

-

-

Feature Engineering:

Feature selection is the process of choosing a subset of the most relevant and important features (variables or columns) from the dataset to use in model training and analysis. The goal of feature selection is to improve the performance, efficiency, and interpretability of machine learning models. Feature selection is particularly crucial when dealing with high-dimensional datasets, as it can help reduce overfitting, reduce computation time, and enhance model interpretability.

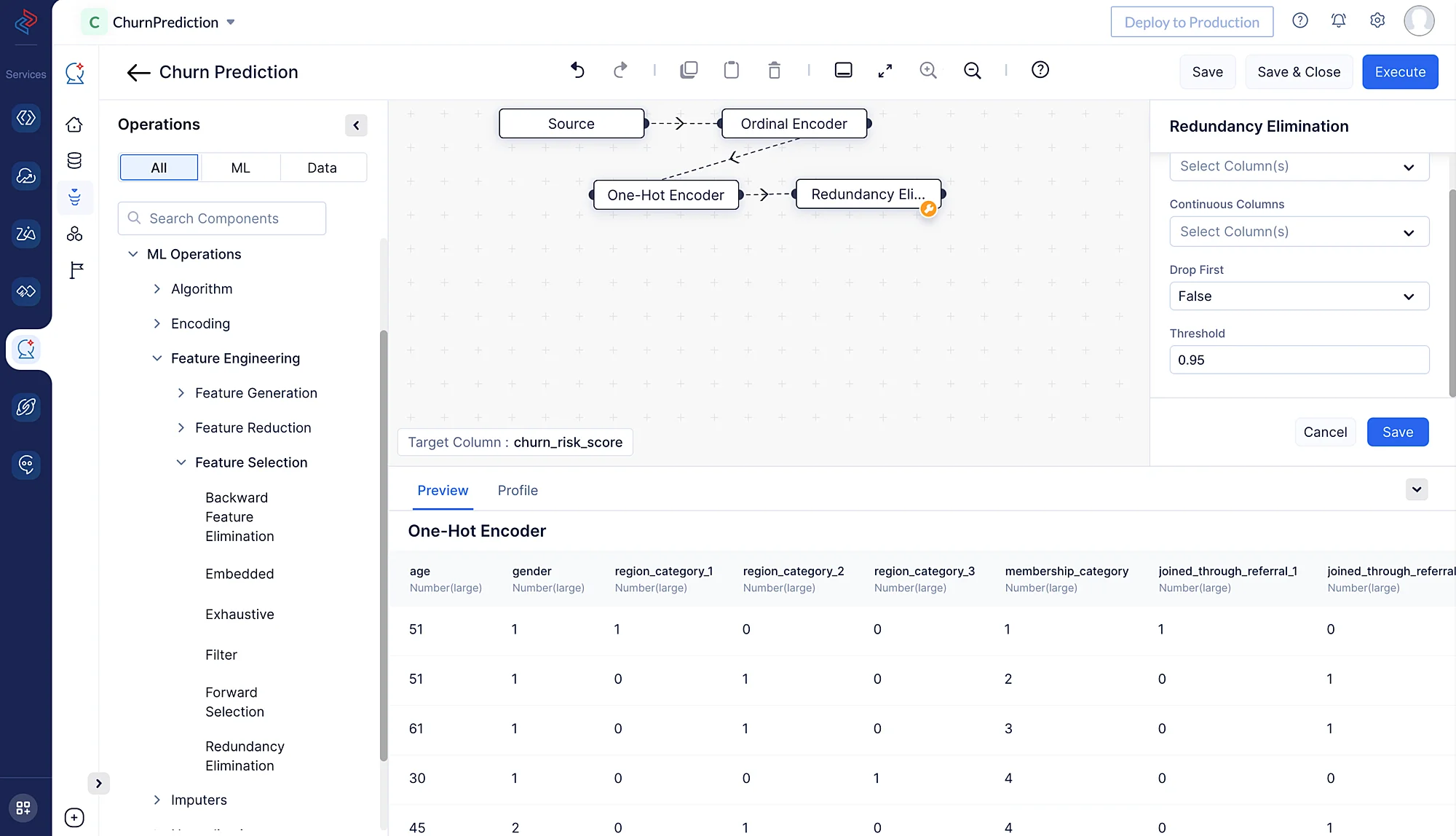

Here we are using the redundancy rlimination feature selection technique to generate the features. This method will identify and remove redundant features from a dataset. Redundant features provide duplicate or highly correlated information, and they don’t contribute significantly to improving the performance of machine learning models. Select Redundancy Elimination node by navigating to ML operations, clicking ->Feature Engineering, selecting ->Feature Selection, and choosing ->Redundancy Elimination.

-

ML Algorithm:

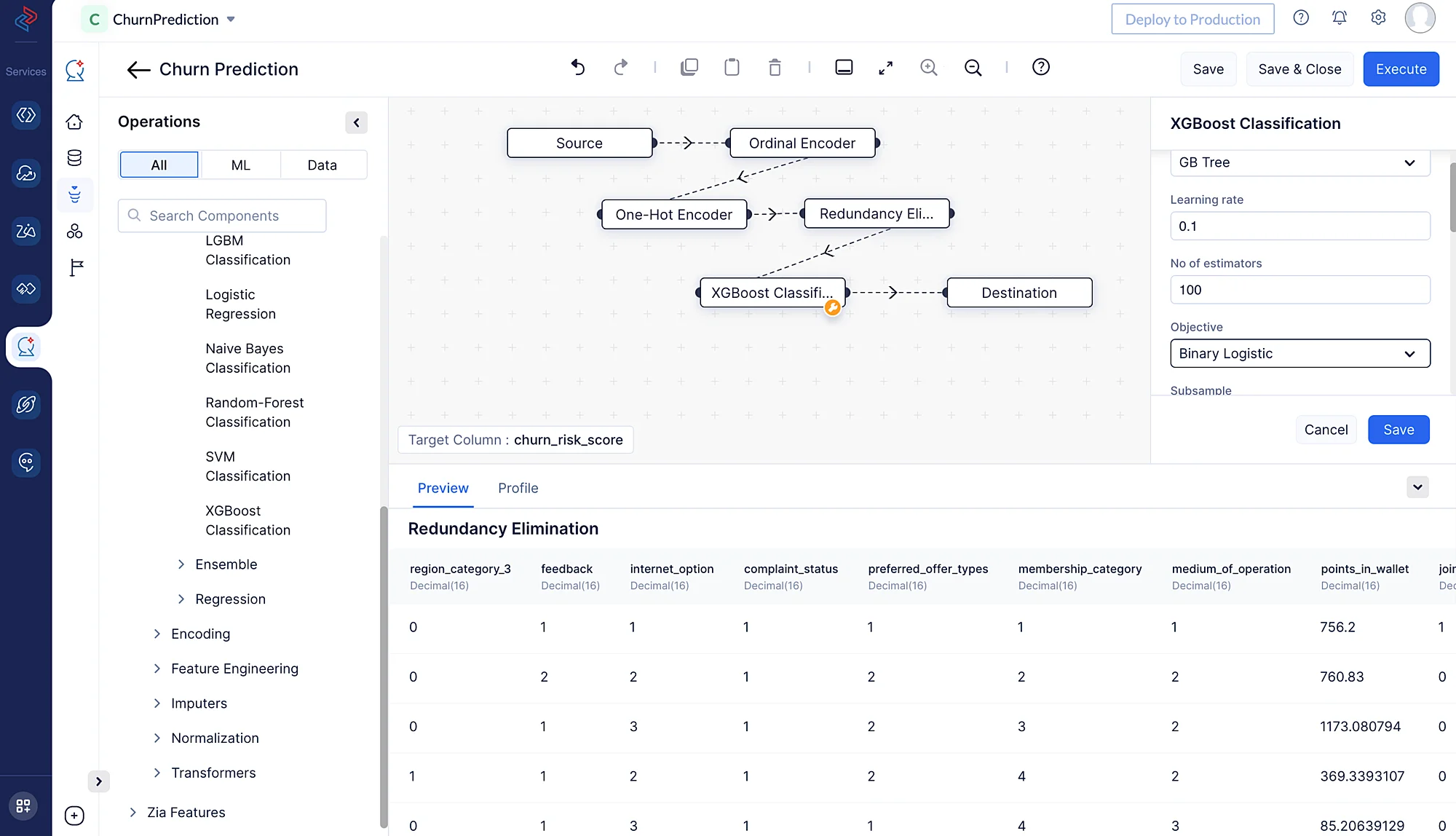

The next step in ML pipeline building is selecting the appropriate algorithm for training the preprocessed data. Here we’ll use the XGBoost classification algorithm to train the data.

XGBoost (Extreme Gradient Boosting) is a popular and powerful machine learning algorithm commonly used for classification tasks. It’s an ensemble learning method that combines the predictions of multiple decision trees to create a strong predictive model. XGBoost is known for its speed, scalability, and ability to handle complex datasets.

We can quickly construct the XGBoost Classification method in QuickML’s ML Pipeline Builder by dragging and dropping the relevant XGBoost Classification node from ML operations, selecting ->Algorithm, clicking ->Classification, and choosing ->XGBoost Classification**.

In order to make sure the model is optimized for our particular dataset, we may also adjust the tuning parameters; in our instance, we can just stick with the default settings. When everything is configured, we may save the pipeline for further testing and deployment.

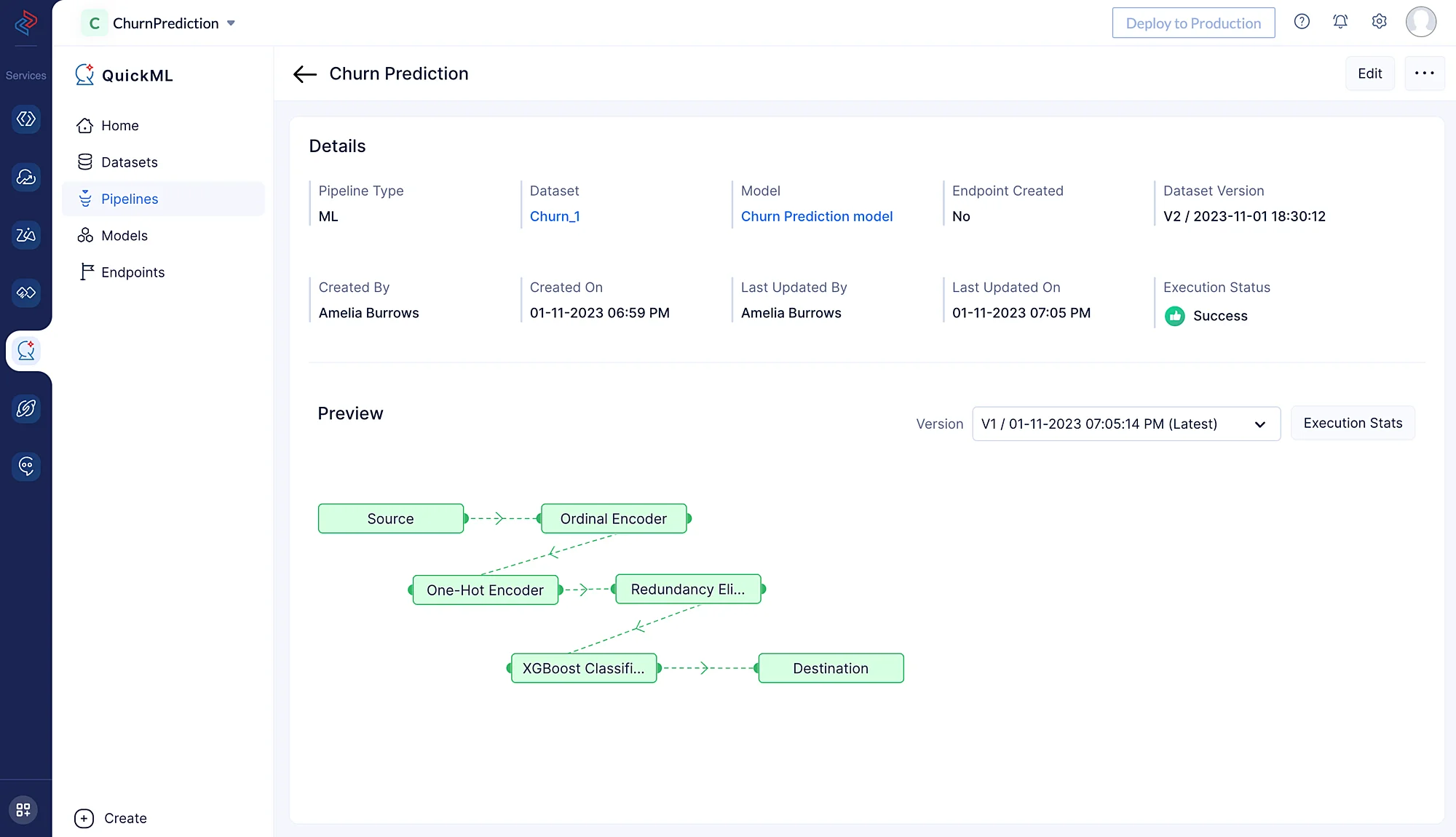

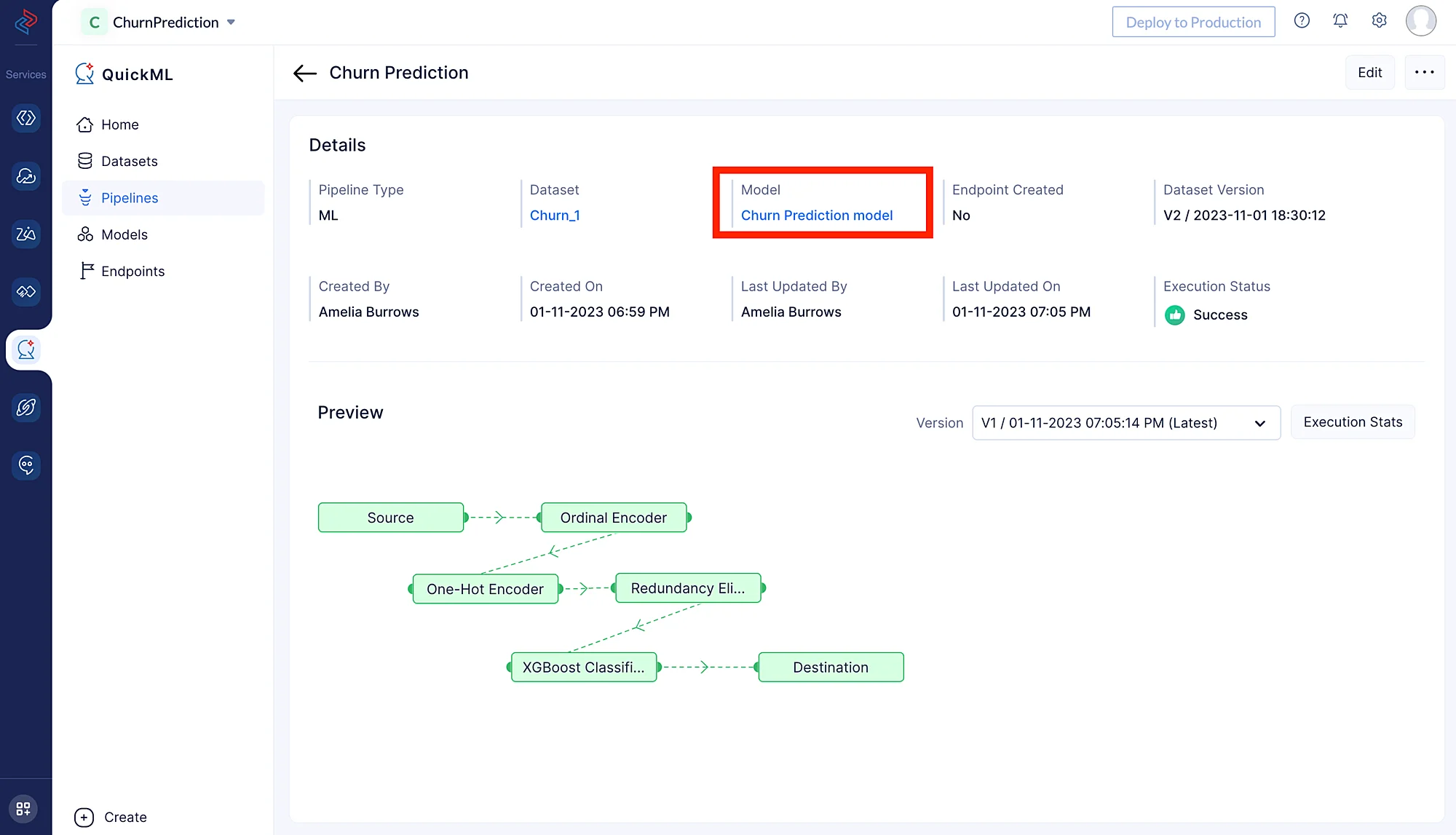

Once we drag-and-drop the algorithm node, its end node will be automatically connected to the destination node. Click Save to save the pipeline and execute the pipeline by clicking the Execute button at the top-right corner of the pipeline builder page. This will redirect you to the page below which shows the executed pipeline with execution status. We can clearly see here that the pipeline execution is successful.

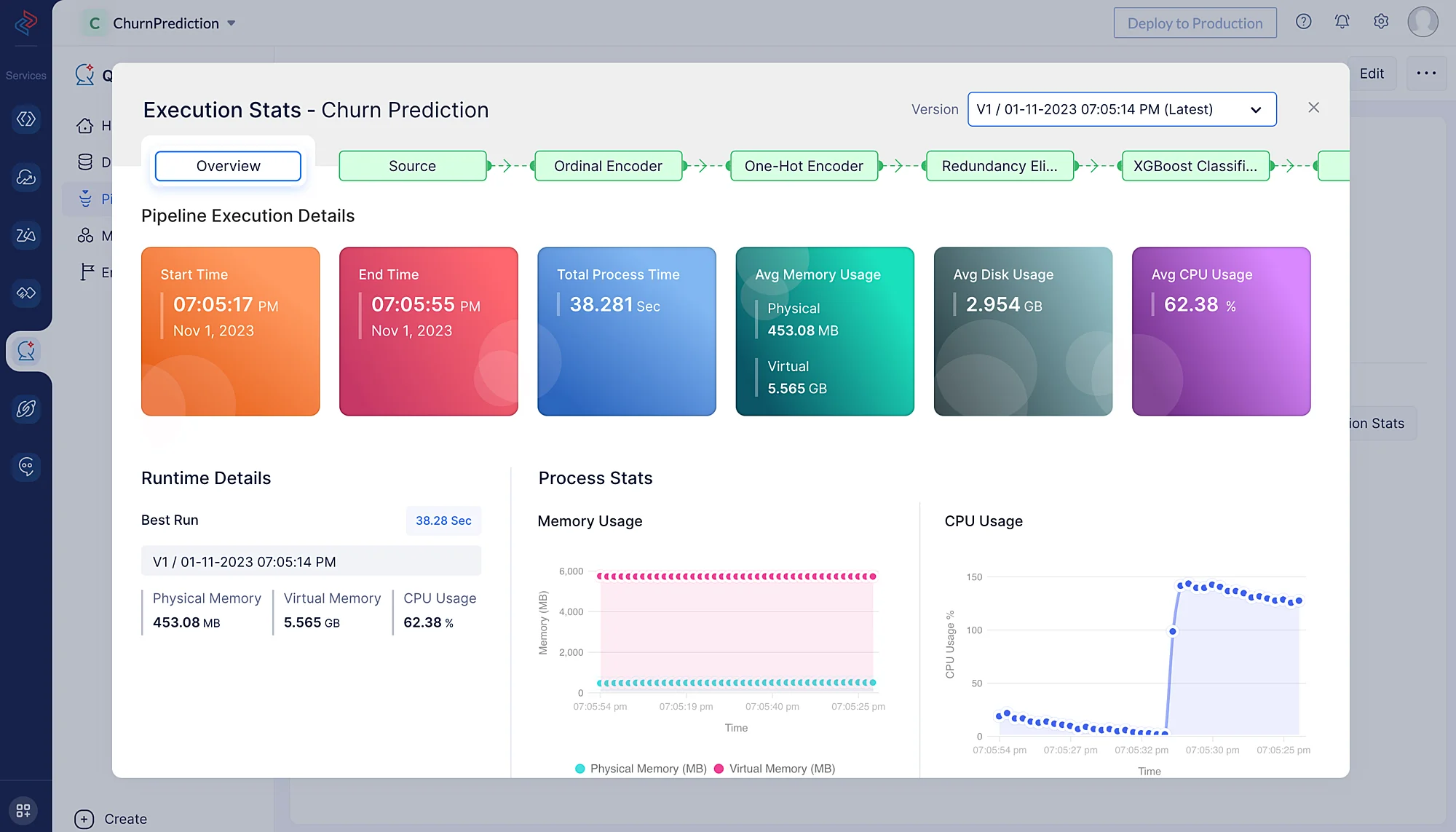

Click Execution Stats to view more compute details about each stage of the model execution in detail.

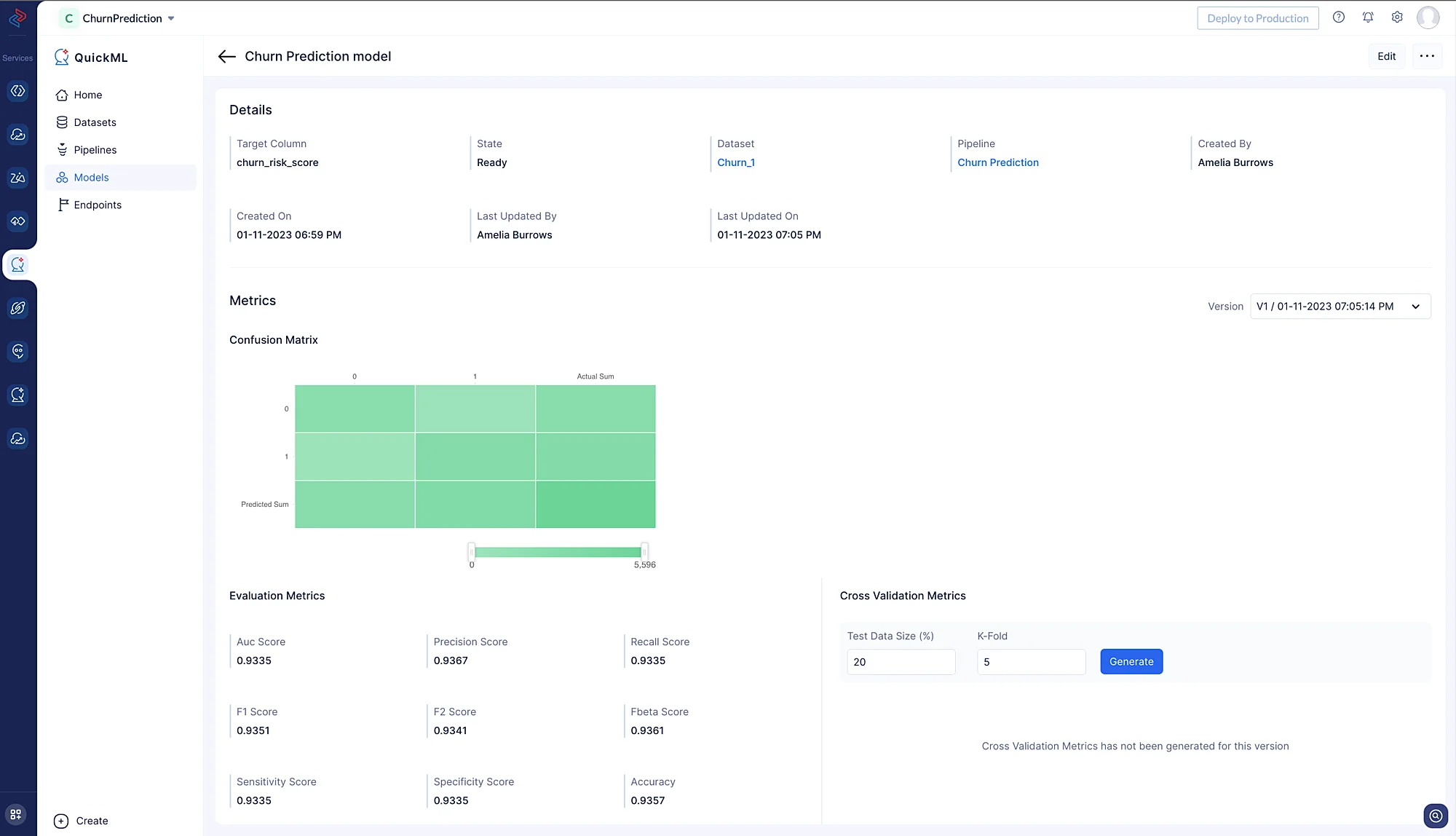

The prediction model is created and can be examined under the Model section(click on Churn Prediction model) following the successful completion of the ML workflow.

This offers useful perceptions into the efficiency and performance of the model while making predictions based on the data.

Last Updated 2025-02-19 15:51:40 +0530 IST