Create a data pipeline

Now that we have uploaded the dataset, we will create a data pipeline with it.

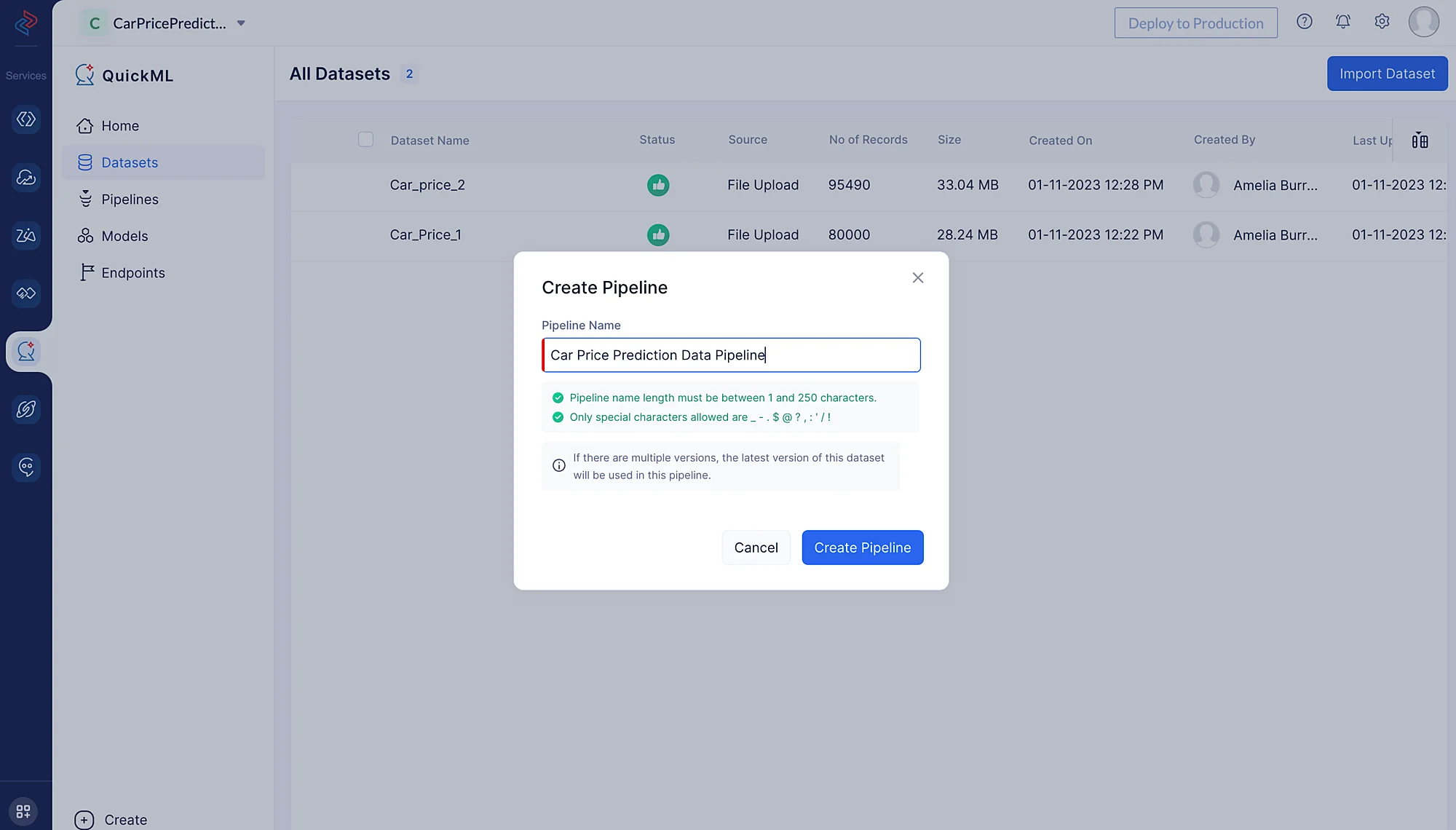

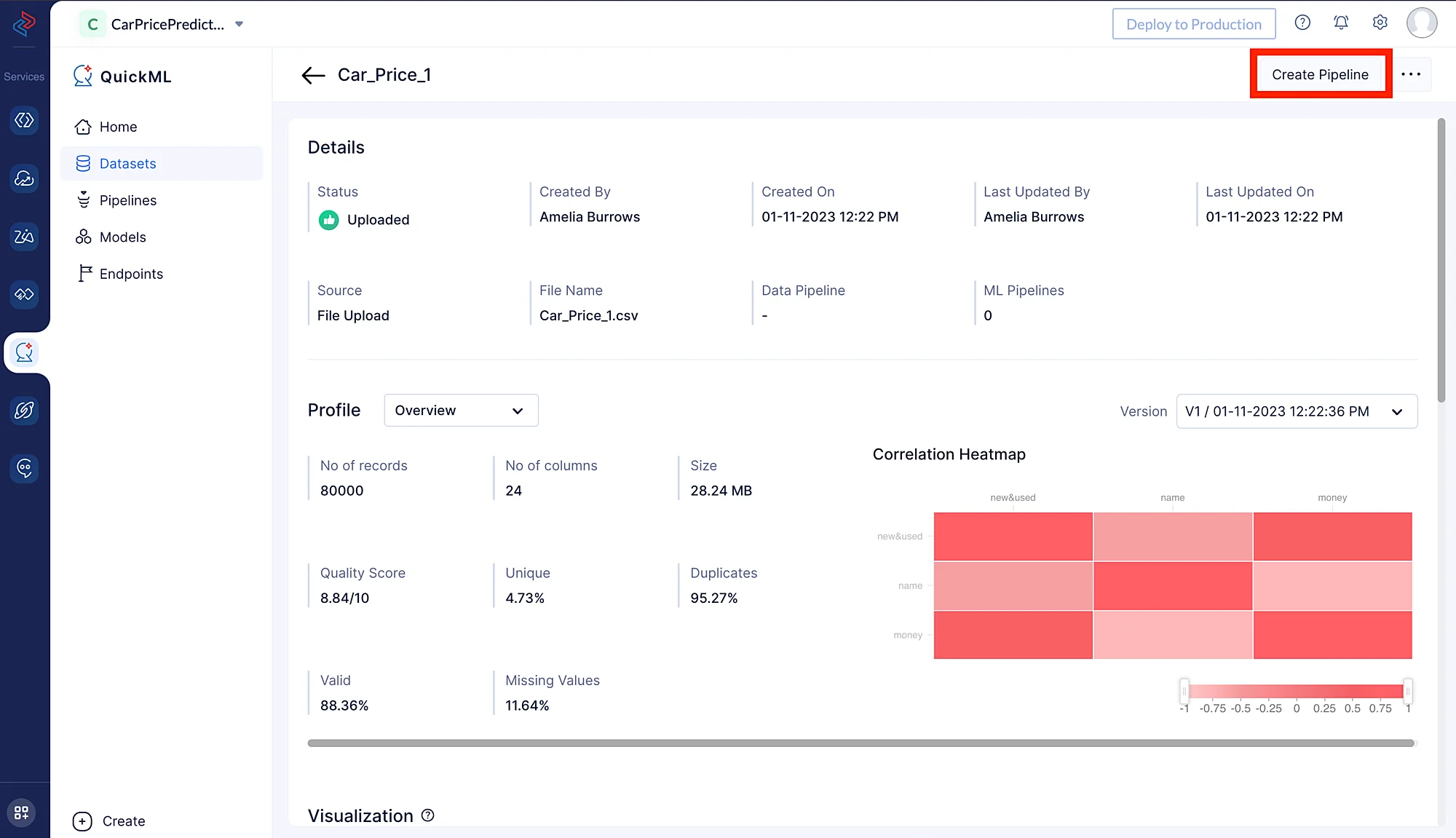

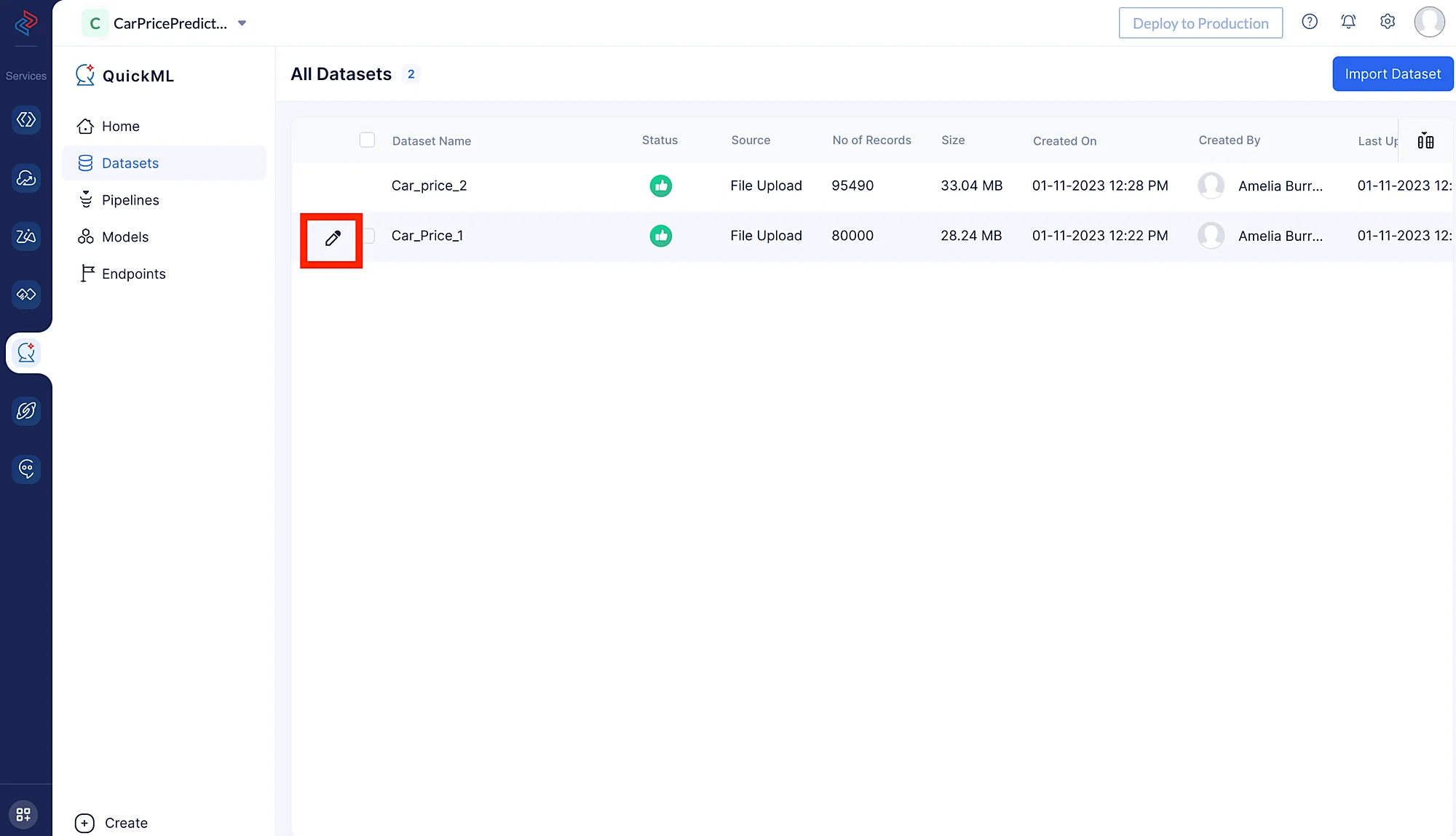

Navigate to the Datasets component in the left menu. There are two ways to create a data pipeline:

- Click the dataset, then click Create Pipeline in the top-right corner of the page.

- Click the pen icon to the left of the dataset name, as shown in the image below.

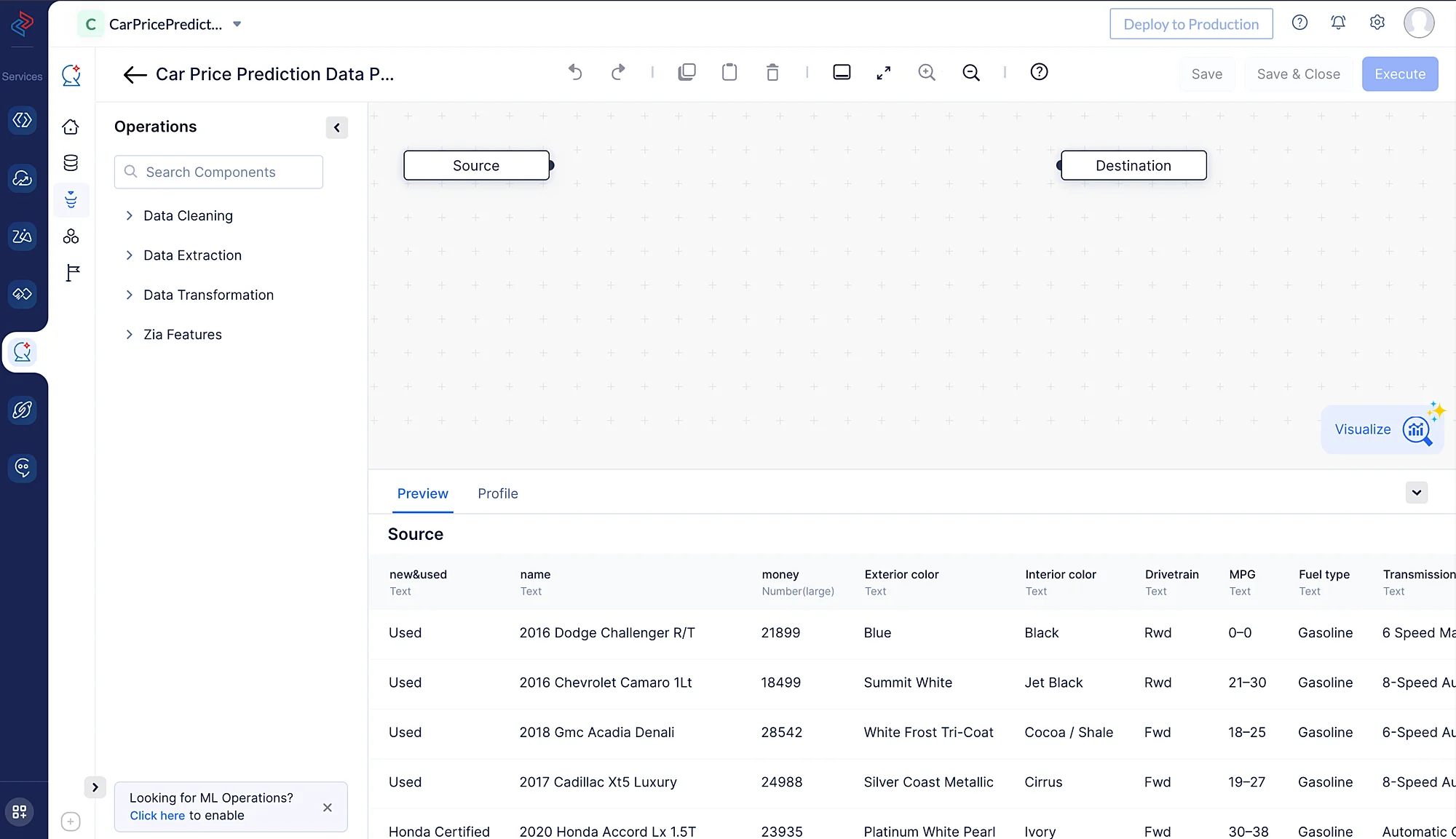

Here, we are creating the pipeline with the Car_Price_1 dataset. Car_Price_2 will be added to this dataset in a later preprocessing step.

The pipeline builder interface will open as shown in the screenshot below.

We will perform the following data preprocessing operations to clean, refine, and transform the datasets, and then execute the data pipeline. Each operation is carried out by an individual node, and these nodes are connected to construct the pipeline.

Data preprocessing with QuickML

Combining two datasets

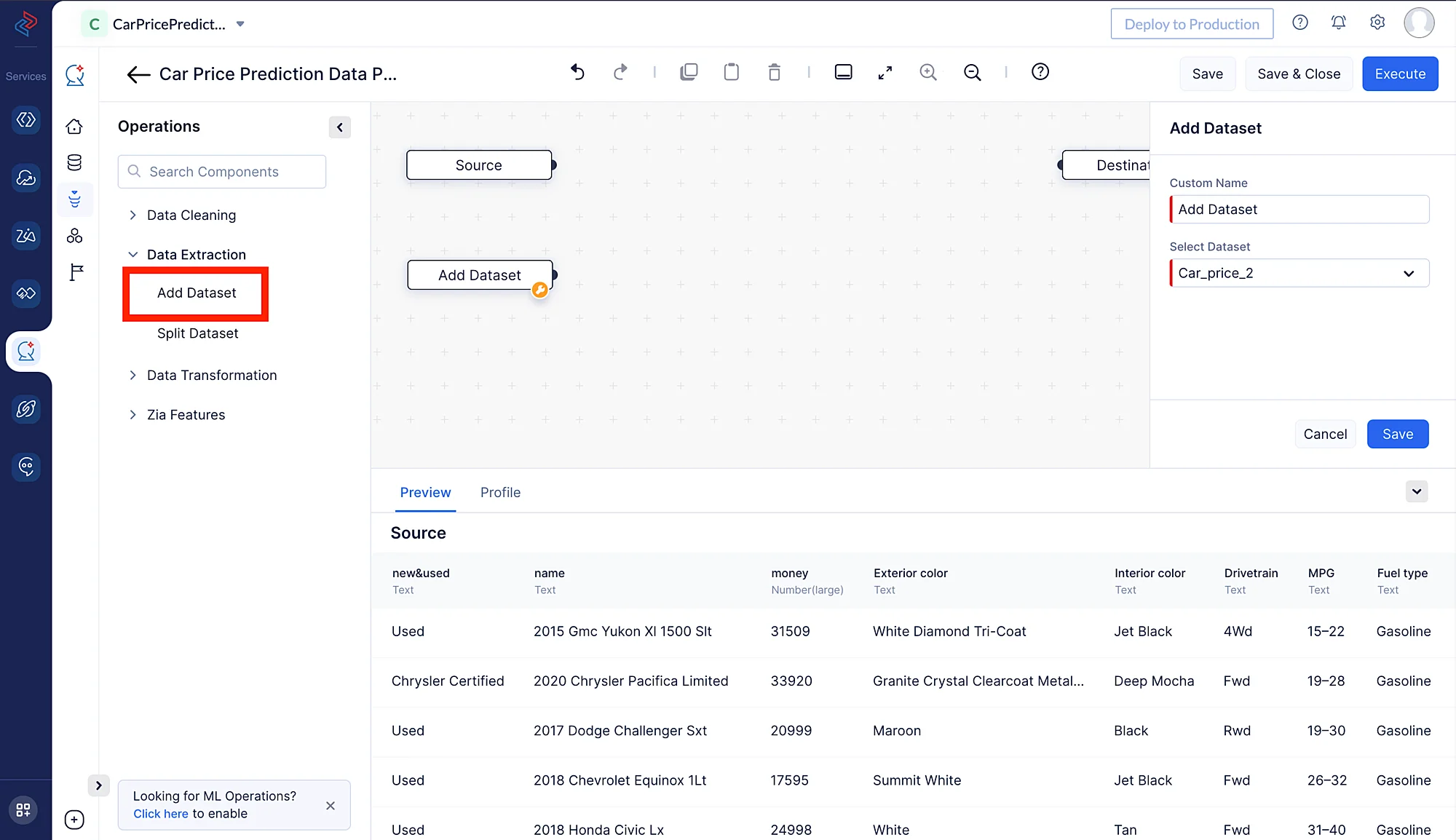

Using the Add Dataset node in QuickML, we can add another dataset to the pipeline. Note that the dataset you wish to add must already be uploaded. Here, we add the Car_Price_2 dataset so that it can be merged with the existing dataset.

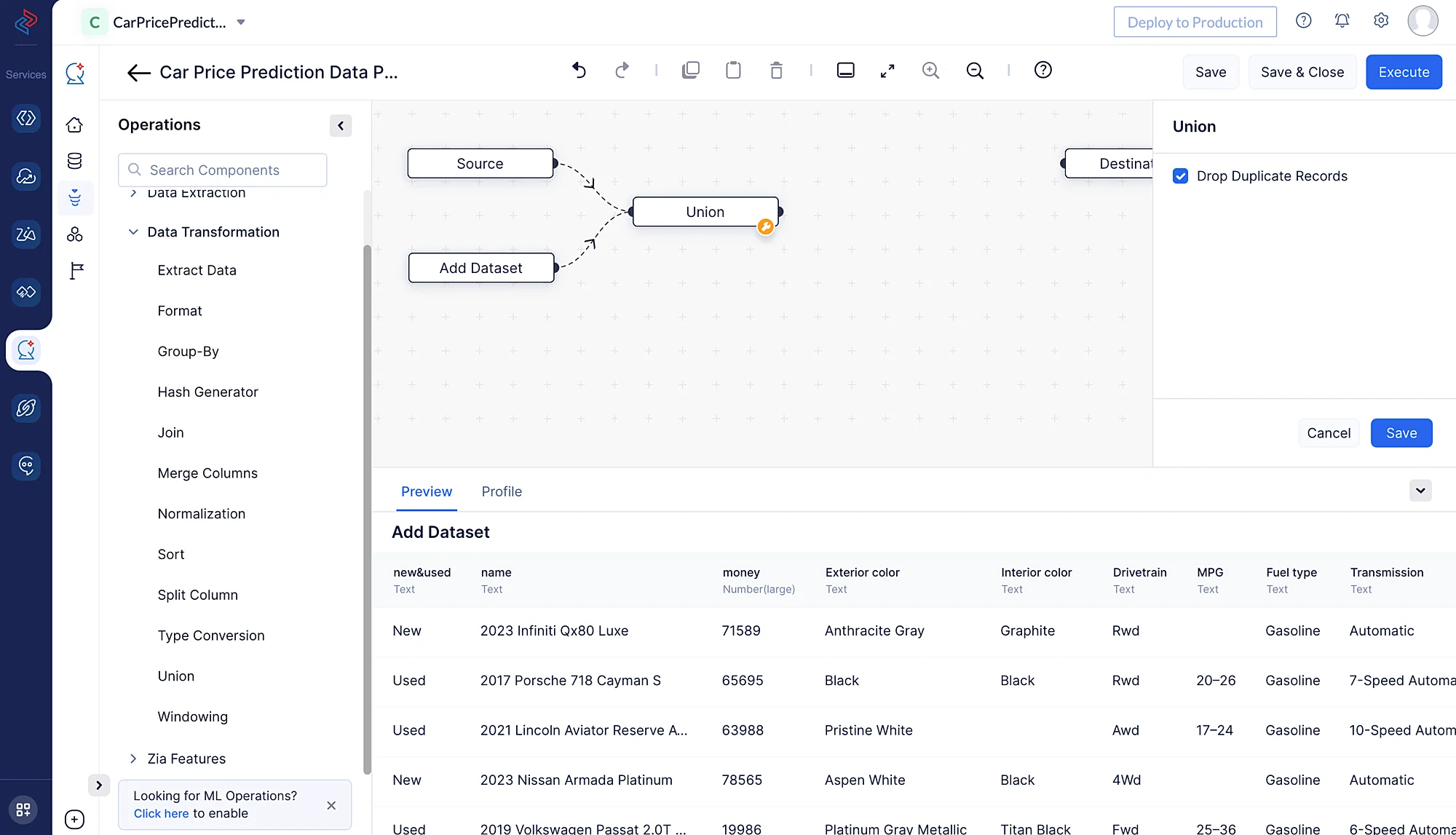

Use the Union node (Data Transformation > Union) in the drag-and-drop QuickML interface to combine the two datasets, Car_Price_1 and Car_Price_2, into a single dataset. If either dataset contains duplicate records, select the “Drop Duplicate Records” checkbox while configuring the operation. Duplicate records are then removed from the combined dataset.
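
To make the Union behaviour concrete, here is a minimal Python sketch of appending one set of rows to another and optionally dropping exact duplicate rows. It illustrates the idea only and is not QuickML's implementation. It assumes both datasets have the same columns, treats a row as a duplicate only when every column value matches, and uses hypothetical toy rows (the `Make` and `Price` columns are made up).

```python
from typing import Dict, List

Row = Dict[str, str]


def union(first: List[Row], second: List[Row], drop_duplicates: bool = False) -> List[Row]:
    """Append the rows of `second` after the rows of `first`.

    With drop_duplicates=True, only the first occurrence of each exact
    duplicate row (same columns, same values) is kept.
    """
    combined = first + second
    if not drop_duplicates:
        return combined
    seen = set()
    result: List[Row] = []
    for row in combined:
        key = tuple(sorted(row.items()))  # hashable identity of the whole row
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


# Hypothetical toy rows standing in for Car_Price_1 and Car_Price_2.
car_price_1 = [
    {"Make": "Toyota", "Price": "21000"},
    {"Make": "Honda", "Price": "18500"},
]
car_price_2 = [
    {"Make": "Honda", "Price": "18500"},  # exact duplicate of a Car_Price_1 row
    {"Make": "Ford", "Price": "24000"},
]

merged = union(car_price_1, car_price_2, drop_duplicates=True)
print([row["Make"] for row in merged])  # ['Toyota', 'Honda', 'Ford']
```

Without `drop_duplicates=True`, the same call returns all four rows, which corresponds to leaving the checkbox unticked.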

Select/drop columns

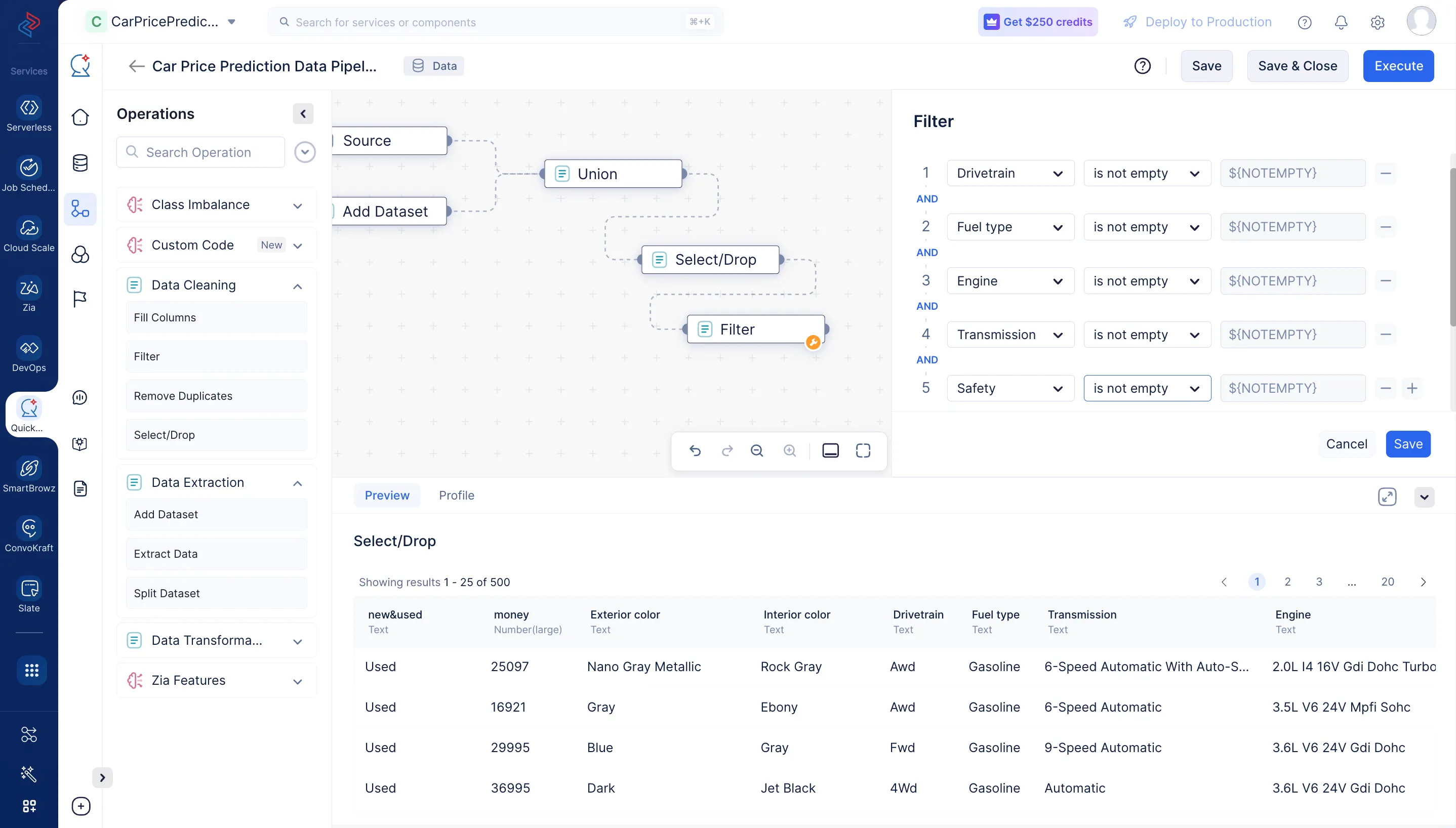

Selecting or dropping columns from a dataset is a common preprocessing step in data analysis and machine learning. Which columns to keep or drop depends on the objectives and requirements of your analysis or modelling task. In the provided datasets, the columns we don’t need for model training are “MPG,” “Convenience,” “Exterior,” “Clean title,” “Currency,” and “Name.” With the Select/Drop node from the Data Cleaning section, you can quickly choose the fields needed for model training.
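
A minimal Python sketch of the two modes, assuming each dataset row is a dictionary keyed by column name. The functions `drop_columns` and `select_columns` and the sample row are hypothetical illustrations, not part of QuickML; only the list of dropped columns comes from this tutorial.

```python
from typing import Dict, Iterable, List

Row = Dict[str, str]

# Columns this tutorial drops before model training.
COLUMNS_TO_DROP = ["MPG", "Convenience", "Exterior", "Clean title", "Currency", "Name"]


def drop_columns(rows: List[Row], columns: Iterable[str]) -> List[Row]:
    """Return new rows without the given columns (columns not present are ignored)."""
    drop = set(columns)
    return [{k: v for k, v in row.items() if k not in drop} for row in rows]


def select_columns(rows: List[Row], columns: Iterable[str]) -> List[Row]:
    """Return new rows that keep only the given columns, in the given order."""
    keep = list(columns)
    return [{k: row[k] for k in keep if k in row} for row in rows]


# Hypothetical sample row.
rows = [{"Name": "Example car", "MPG": "30", "Price": "21000", "Engine": "2.0L"}]

print(drop_columns(rows, COLUMNS_TO_DROP))        # [{'Price': '21000', 'Engine': '2.0L'}]
print(select_columns(rows, ["Price", "Engine"]))  # [{'Price': '21000', 'Engine': '2.0L'}]
```

Dropping a list of unwanted columns and selecting the complementary list of wanted columns give the same result, so the choice is mainly about which list is shorter to maintain.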

Filter dataset

Filtering a dataset typically involves selecting the subset of rows that meet certain criteria or conditions. Here, we use the Filter node from the Data Cleaning section to keep only the rows that have non-empty values in the “Drivetrain,” “Fuel Type,” “Engine,” “Transmission,” and “Safety” columns.
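
The sketch below shows, in plain Python, what keeping only rows with non-empty values in the listed columns amounts to. It assumes the five conditions are combined with AND (a row must satisfy all of them) and that a value counts as empty when it is missing or blank; both are assumptions about how the Filter node is configured here. The function names and sample rows are hypothetical.

```python
from typing import Dict, Iterable, List, Optional

Row = Dict[str, Optional[str]]

# Columns that must be non-empty for a row to be kept.
REQUIRED_COLUMNS = ["Drivetrain", "Fuel Type", "Engine", "Transmission", "Safety"]


def is_empty(value: Optional[str]) -> bool:
    """Treat a missing value (None) or a blank string as empty."""
    return value is None or value.strip() == ""


def filter_non_empty(rows: List[Row], columns: Iterable[str]) -> List[Row]:
    """Keep only the rows in which every listed column has a non-empty value."""
    cols = list(columns)
    return [row for row in rows if all(not is_empty(row.get(c)) for c in cols)]


# Hypothetical rows: the second one has no "Safety" value and is filtered out.
rows = [
    {"Drivetrain": "FWD", "Fuel Type": "Gasoline", "Engine": "2.0L",
     "Transmission": "Automatic", "Safety": "Backup camera"},
    {"Drivetrain": "AWD", "Fuel Type": "Hybrid", "Engine": "2.5L",
     "Transmission": "CVT", "Safety": ""},
]

kept = filter_non_empty(rows, REQUIRED_COLUMNS)
print(len(kept))  # 1
```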

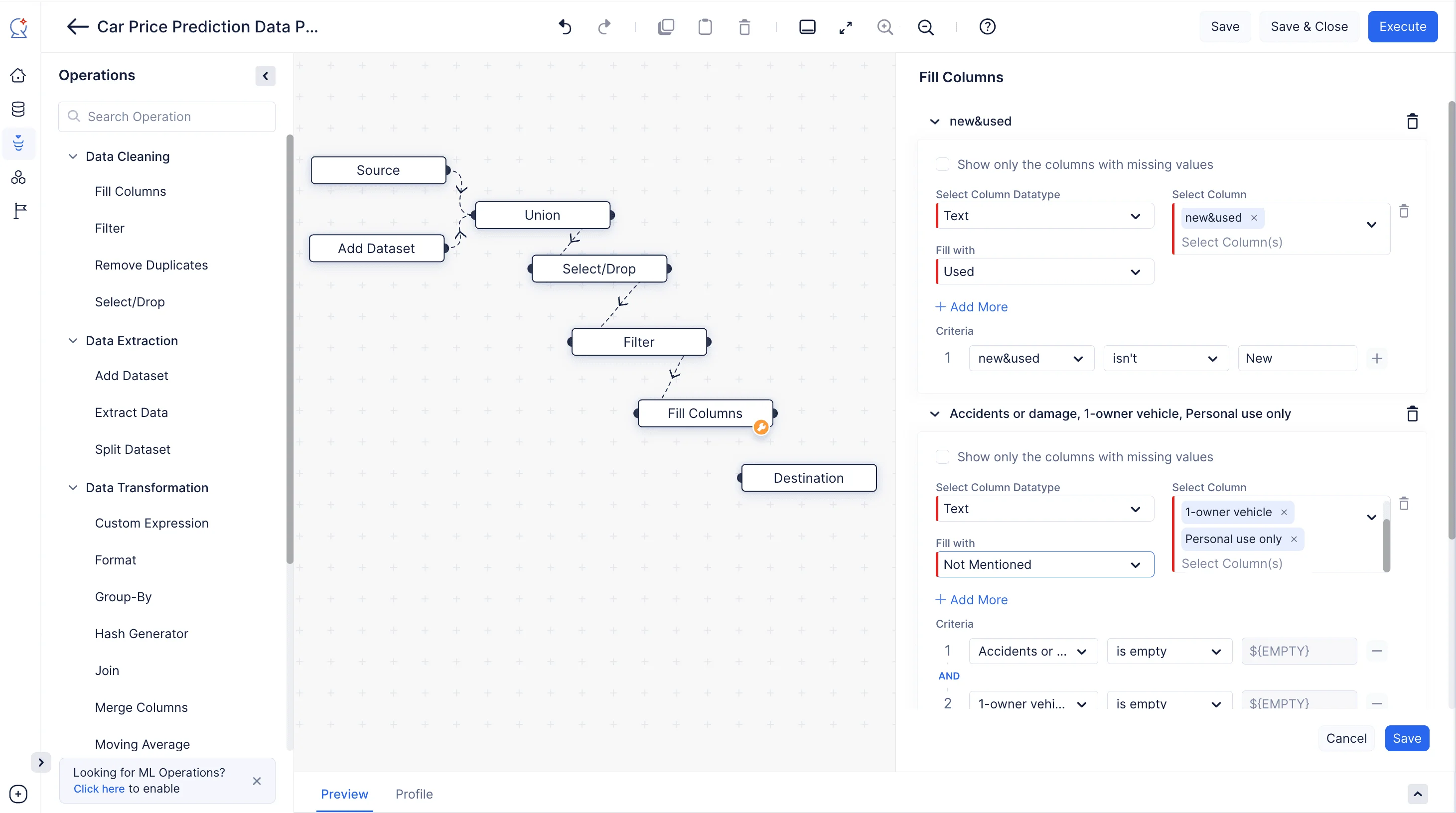

Fill columns in dataset with values

Using the Fill Columns node in QuickML, we can fill column values based on a specified condition, targeting either null or non-null values as required. Here, we fill the “new&used” column with the custom value “Used” for every entry that is not labeled “New.” For the “Accidents or damage,” “1-owner vehicle,” and “Personal use only” columns, we replace empty values with the custom value “Not mentioned.”
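
As a rough Python illustration of conditional filling, the hypothetical helper `fill_where` below sets a column to a fixed value wherever a condition on the current value holds, and it is applied to both rules described above. It assumes rows are dictionaries, that blank strings and missing values both count as empty, and that a column absent from a row is created when filled; QuickML's own handling may differ.

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, Optional[str]]


def is_empty(value: Optional[str]) -> bool:
    """Treat a missing value (None) or a blank string as empty."""
    return value is None or value.strip() == ""


def fill_where(rows: List[Row], column: str, value: str,
               condition: Callable[[Optional[str]], bool]) -> List[Row]:
    """Return copies of `rows` with `column` set to `value` wherever
    condition(current value) is True. A missing column counts as None."""
    result: List[Row] = []
    for row in rows:
        new_row = dict(row)
        if condition(new_row.get(column)):
            new_row[column] = value
        result.append(new_row)
    return result


# Hypothetical rows.
rows = [
    {"new&used": "New", "Accidents or damage": "None reported",
     "1-owner vehicle": "Yes", "Personal use only": ""},
    {"new&used": "Certified", "Accidents or damage": None,
     "1-owner vehicle": "", "Personal use only": "Yes"},
]

# Anything not labeled "New" (including empty values) becomes "Used".
rows = fill_where(rows, "new&used", "Used", lambda v: v != "New")

# Empty values in these columns become "Not mentioned".
for col in ["Accidents or damage", "1-owner vehicle", "Personal use only"]:
    rows = fill_where(rows, col, "Not mentioned", is_empty)

print([r["new&used"] for r in rows])  # ['New', 'Used']
```

Expressing each rule as a column, a fill value, and a condition keeps the "fill non-matching values" and "fill empty values" cases in one helper.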

Save and execute

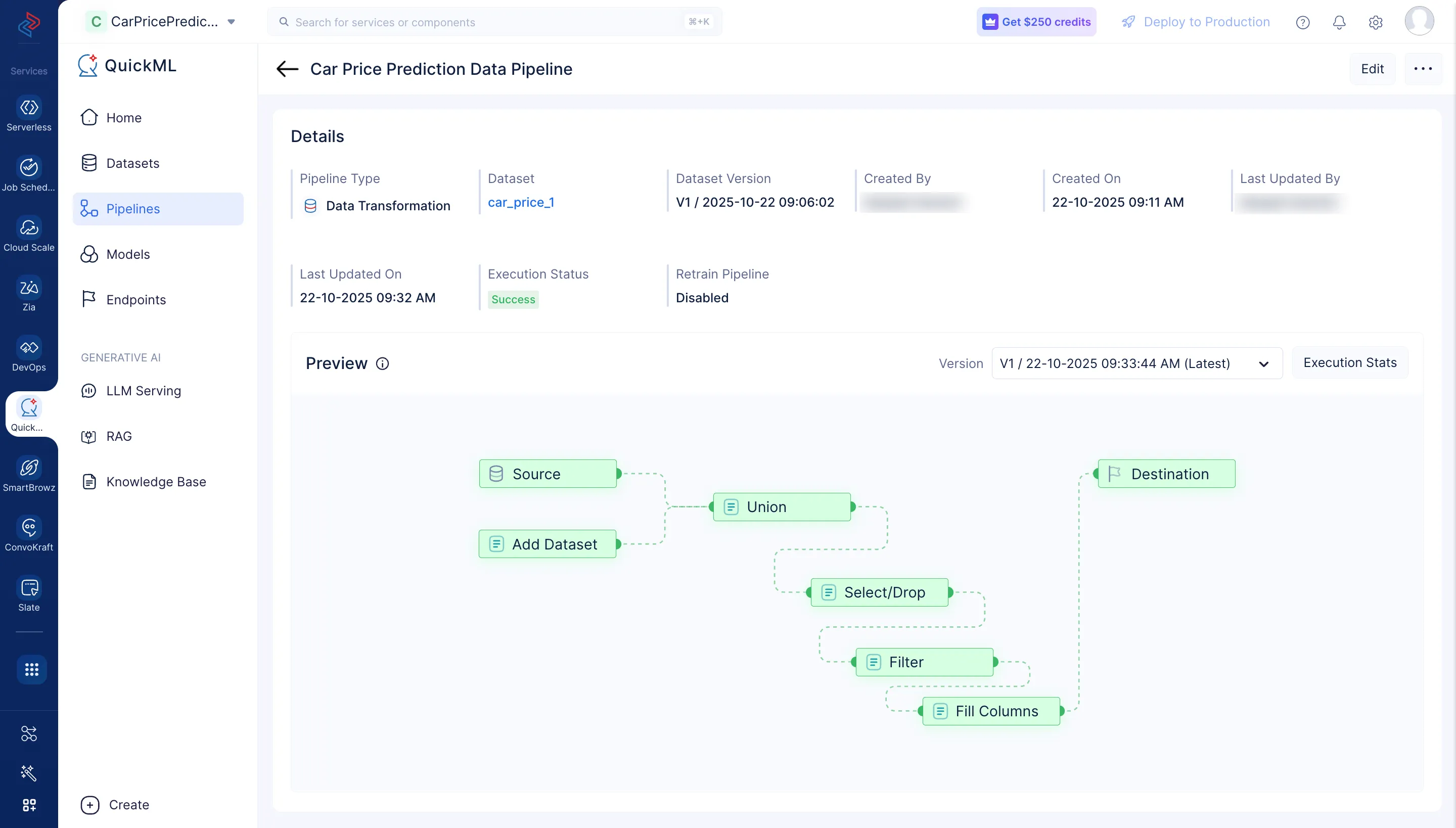

Now connect the Fill Columns node to the Destination node. Once all the nodes are connected, click Save to save the pipeline, then click Execute to run it.
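
Conceptually, a connected pipeline feeds each node's output into the next node. The Python sketch below models that as an ordered list of functions and records the row count after each stage. It is a simplified, hypothetical model rather than how QuickML executes pipelines: `run_pipeline`, the stage functions, and the rows are made up, and the two-input Union step is left out to keep the chain linear.

```python
from typing import Callable, Dict, List, Optional, Tuple

Row = Dict[str, Optional[str]]
Node = Callable[[List[Row]], List[Row]]


def run_pipeline(source: List[Row],
                 nodes: List[Tuple[str, Node]]) -> Tuple[List[Row], List[Tuple[str, int]]]:
    """Feed each node the previous node's output, in order, and record
    the number of rows after every stage."""
    data = source
    stats: List[Tuple[str, int]] = [("Source", len(data))]
    for name, node in nodes:
        data = node(data)
        stats.append((name, len(data)))
    return data, stats


def drop_name(rows: List[Row]) -> List[Row]:
    # Select/Drop stage: remove the "Name" column.
    return [{k: v for k, v in r.items() if k != "Name"} for r in rows]


def keep_with_engine(rows: List[Row]) -> List[Row]:
    # Filter stage: keep rows whose "Engine" value is neither None nor "".
    return [r for r in rows if r.get("Engine")]


def fill_used(rows: List[Row]) -> List[Row]:
    # Fill Columns stage: anything not labeled "New" becomes "Used".
    return [{**r, "new&used": "New" if r.get("new&used") == "New" else "Used"} for r in rows]


# Hypothetical source rows.
source = [
    {"Name": "Car A", "Engine": "2.0L", "new&used": "New"},
    {"Name": "Car B", "Engine": "", "new&used": "Certified"},
    {"Name": "Car C", "Engine": "1.5L", "new&used": None},
]

result, stats = run_pipeline(source, [
    ("Select/Drop", drop_name),
    ("Filter", keep_with_engine),
    ("Fill Columns", fill_used),
])
print(stats)   # [('Source', 3), ('Select/Drop', 3), ('Filter', 2), ('Fill Columns', 2)]
print(result)  # [{'Engine': '2.0L', 'new&used': 'New'}, {'Engine': '1.5L', 'new&used': 'Used'}]
```

Because each stage only depends on its input rows, the same ordered list of stages can be rerun on new data, which mirrors the idea of reusing a saved pipeline.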

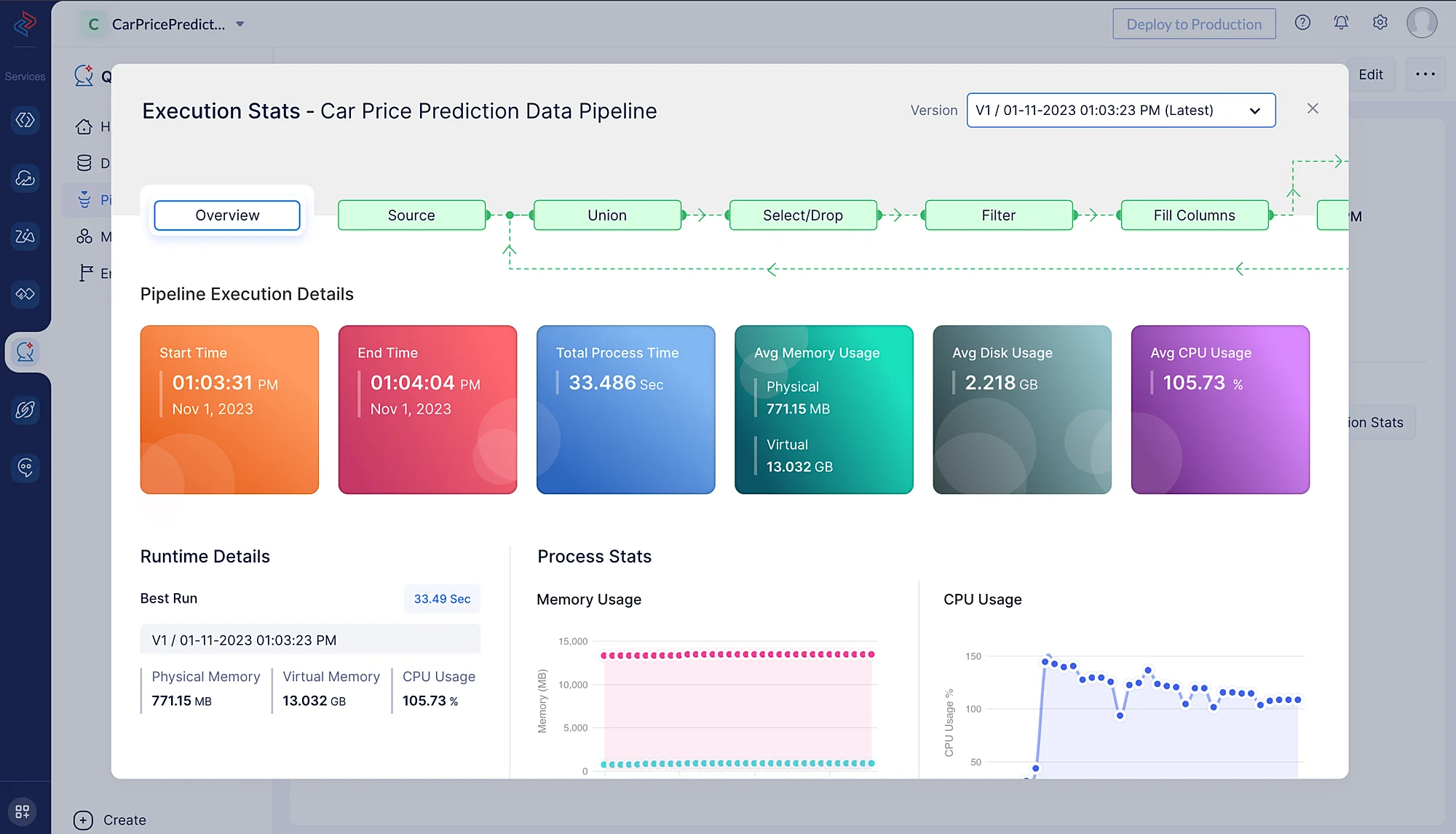

You will be redirected to a page that shows the executed pipeline and its execution status.

Click Execution Stats to view details about each stage of the execution.

In this part, we’ve looked at how to preprocess data with QuickML by combining, cleaning, filtering, and filling datasets to prepare them for building machine learning models. This data pipeline can be reused to create multiple ML experiments for different use cases within your Catalyst project.

Last Updated 2025-10-29 12:32:36 +0530 IST